Introduction -

This post is part of our AI6P interview series on “AI, Software, and Wetware”. Our guests share their experiences with using AI, and how they feel about AI using their data and content.

This interview is available as an audio recording (embedded here in the post, and later in our AI6P external podcasts). This post includes the full, human-edited transcript.

Note: In this article series, “AI” means artificial intelligence and spans classical statistical methods, data analytics, machine learning, generative AI, and other non-generative AI. See this Glossary and “AI Fundamentals #01: What is Artificial Intelligence?” for reference.

Interview -

Note: This interview was recorded on March 17, 2025.

I’m delighted to welcome Jordan Harrod from the USA as my guest today on “AI, Software, and Wetware”. Jordan, thank you so much for joining me today for this interview! Please tell us about yourself, who you are, and what you do.

Yeah. Thank you so much for having me. I am, like you said, from the US. I'm a PhD candidate at MIT, as well as an AI implementation and strategy consultant. I also make content on YouTube, Instagram, TikTok – for as long as that's allowed in the United States – on AI education, AI ethics, the relationships that we have with technology as it evolves, particularly at this time.

I am finishing my PhD this year. The timeline is the thing that's a little bit in question, largely because of the precarious funding situation for higher education at the moment. So MIT is hoping that people move out of the Institute a little bit quicker than normal.

Oh, wow. I just read where one of the universities is rescinding offers to incoming students because they can't be sure that they would be able to fund them to do the research they need to do 1. And that's a sad thing to see.

Yeah. Absolutely. As far as I know, and I'm very much not in the loop on this, MIT is not doing that. But we're also in the middle of PhD interview season. Some departments fund their students full stop. Some departments, you have to get funded by your lab. Everyone's being very thoughtful about how many students to accept, how to kind of hedge against the current landscape such that we can make sure that the people who get brought in in the fall are supported. So we'll see what happens. That's not my job. I'm not jealous of those people.

Right. So you're finishing up your dissertation. Can you say a little bit about what your dissertation topic is, at a high level?

Yeah. So I work on understanding the relationship between sleep disorders and Alzheimer's and essentially projecting whether interventions in different stages affect people's trajectories.

Oh, wow. That's really interesting.

It's accidentally timely, I guess. I think a lot of the things that I've done are kind of accidentally timely. Because there's been a lot of public discussion around the role of sleep and Alzheimer's recently, and also just the broader importance of getting high-quality sleep.

And it does remind me a bit of starting to make content. I started on YouTube when I started my PhD, so a little under seven years ago. And I was talking about AI ethics. I was talking about the human impact of these systems before it was cool. And so as things have started to progress, it's very interesting to see a lot of the things that I talk about become public conversations, and how those conversations evolve in ways that I didn't necessarily expect them to.

So what prompted your interest in AI ethics 7+ years ago?

So I have always been interested in, I guess, two things: stories and short term impact. I got interested in biomedical engineering, which was my undergrad major, with a concentration in electrical and a minor in CS in high school, because I had issues with blood sugar that didn't actually end up getting figured out until, like, two years ago. And I looked into literature in that space around, like, “What are other people doing here? What solves might there be for the things that people can't seem to figure out?”

I also partially herniated a disc in my lower back when I was 15. So I've had many a weird-for-my-age health issue. And I think I got interested in biomedical engineering – how do you engineer the human body – pretty early on, as a way of both looking for solves for my own issues, but also kind of a broader curiosity around, like, I'm not the only person dealing with this. The human body is fascinating. I was pre-med originally. And are there ways that we can develop interesting tools that might impact people on a very day-to-day level and improve quality of life there?

And I got into data analysis probably my sophomore year of college? I thought that I was going to go into tissue engineering. But I also didn't like wet lab very much. And it turned out that I had more of an affinity for the computational side.

And I think that was also the point where I was kind of looking at the landscape and saying, you know, there's all this data that we have in the health space, and it's messy, and it's very inconsistent. And we're also not using a lot of it. And a lot of what we're using is … I don't want to say ‘clinical’ as a pejorative, but I think that there's always a balance of talking to people about their experiences and using that to think about care, and kind of the more practical, clinical side of things.

And so I had the opportunity to do some work in that space. I ended up connecting with somebody at Cornell, where I did my undergrad, who was in the AI ethics space. And she kind of introduced me to the AI space as a result.

And so I very much came in through a lens of, like, “How do we understand and help people, and how do we leverage technologies to do that?” I tend to lean into kind of a jack-of-all-trades disposition when it comes to skill sets. I'm more interested in developing tools that can help people than necessarily on one specific problem.

And as I went through undergrad, ML was becoming a huge thing, and I was still super interested in it. And so it was a little bit of serendipity where the thing that I was interested in and the money, essentially, and the research interest was heading in the same direction.

So that's how I ended up at a joint Harvard MIT program to do my PhD. So it's been a fun journey, and I think that coming in through a very people-centered lens has also been very interesting as the landscape has evolved, because particularly right now, it almost feels like we're doing a lot of the opposite.

Yeah. You mentioned AI ethics early on, and this is before the latest boom in generative technologies that started up a little more recently. And I think a lot of people, when they hear ‘AI’, they just think of ChatGPT. And there's just so much more to that. AI has been around for decades longer. And there's a lot more with machine learning and other AI techniques that are part of our daily lives, and part of our research, and part of a lot of the systems that we use. I use an analogy to an iceberg. It's kind of under the waterline. People don't always necessarily realize it's there, or understand the ethical considerations that come into play with regard to it. You mentioned medical data, and there's confidentiality, and a lot of other aspects that are important there.

Absolutely. Yeah. I mean, I think coming into it through the kind of health care lens certainly gives me a lot more appreciation for not even, you know, things like copyright and kind of the existing challenges and conversations around creators, and their data being used without their consent to develop these models; but also almost a more fundamental issue of, like, the things that you create and who owns them. And who owns, in the health care sense, things that are essentially derivative from your body.

I think those are really good lines to come into this space from, because I think it gives me a lot more appreciation for both the challenges of doing that and balancing people's autonomy over how their data and their likeness and things like that are used, with the potential benefits of leveraging AI for different things. And I say AI as the very broad term that has almost lost a lot of meaning in popular discussions. But I think that it's certainly not a lens that I see a lot of recent AI development and discourse come through. And it’s a lens that I try to make content and consult and things like that around, in the effort of hopefully, you know, moving the needle a little bit in a direction that’s more people-centered.

Yeah. That's great. So thank you for sharing all that. It sounds like you've used AI and machine learning quite a lot professionally. Have you also used it in your personal life?

Yeah. So I think that I use Claude on basically a daily basis. I also use ChatGPT, maybe not on a daily basis, but probably multiple times a week. There's, you know, the back end stuff of things like mail flagging and AI stuff that we don't necessarily think about as being AI-related.

But it's definitely a big part of my life, and I use it for brainstorming. I use it as someone who often finds it easier to kind of talk into a microphone for twenty minutes and then have a transcript get reformatted into something workable, because I can't untangle the ideas in my brain.

I think that I often, I don't know, sit in a bit of an interesting position when it comes to AI. Because I am Black, and I'm a woman, and I'm queer, and I have ADHD, and I'm autistic. And I also have chronic health stuff. And so in a lot of ways, I kind of am the avatar of the person who could stand to be most harmed by these things. And at the same time, I personally derive a lot of benefit from it, while watching kind of the greater landscape and how they can be harmful.

I also have AI note-takers for meetings, things like that. I try to lean on privacy-preserving technologies when I can. When I edit videos, I use an AI-powered tool called Descript. There's a lot of ways that it sits within my life.

But I think my main tenet when it comes to using AI is twofold. One is, “How can I use this in a way that minimizes harm as much as I can?” But then also, “How can I use this as, in a way, an assistive device to offload things that have legitimately been challenging for me due to various reasons?”

I mean, my doctor uses – I don't know what tool he uses, off the top of my head. We've talked about these things before, because I'm in the AI space. But he has tried out a few different AI scribe tools. So when I come into his office, something that I really like, honestly, is that we can sit and, like, have an extended conversation about my health and my needs and his thoughts. And it's not him, like, sitting in front of a computer typing constantly and needing to handle notes.

My program has us work inpatient for six months, in internal medicine or ICU or cardiology. And so I certainly have an appreciation for the challenges that doctors have in providing quality health care to people, in spite of the US health care system very much disincentivizing that. And so there's also stuff like that where I'm like, if we can have AI tools that mean that you can have better relationships with your doctor, and get higher levels of care, then I think that's awesome.

Yeah. It's really interesting that you mentioned AI scribes for clinical purposes. I just had my annual physical a few weeks ago, and my doctor mentioned that their organization had just switched over from one medical scribe, which they all kind of hated because it wasn't very accurate and didn't work very well. And they switched over to a new one. And she actually asked me, was I okay with her using that? And she felt that it did help her to actually pay more attention to her patients. The obvious concern is privacy, and so we talked a little bit about AI ethics. She said she would love it if, for one of my upcoming articles, I would dig into comparing their privacy policies and what they do to make sure that patients' rights and privacy are protected, while still helping the doctors be more present with their patients.

Absolutely. Yeah. No, I mean, my doctor's been great about disclosing this kind of stuff. And I go to a concierge practice, so I have the privilege of, by default, having a higher level of care than the average person does. I would argue the level of care that I get is what should be standard within the US health care system, but that is not up to me.

I think that that's also a huge thing because I'm coming in and, you know, you're also coming in, with a level of understanding around AI tools and kind of how they work. And so I also know that if my parents were to go into their doctor's office, and their doctor were to be like, “Oh, we're using AI for blah blah blah”, I could 100% see them being uncomfortable with it, just purely because they don't understand the technology, and they don't necessarily know whether it's something that's going to be safe for them and represent them in the way that they want to be.

So it's very complicated. So much of it also comes down to communications, and that's not something that a lot of people are trained to do. And it's also not something that you necessarily have time to do when your hospital or whoever is switching AI tools every six months.

Right. Yeah. She mentioned that their IT director was responsible for looking at and choosing these tools for them to pilot throughout the system.

But on the one hand, I could certainly understand why people don't trust AI tools. If someone says “Here, I want to use this tool, and it's AI, with you”, especially members of the Black community because the medical industry has not really been very kind, and tools are known to be biased and not to recognize, you know, bruises on Black skin correctly. And there's just so many aspects where it's not right, and it may end up causing them additional harm, as opposed to “Do no harm”, right?

Yep. Yeah. Absolutely. I mean, I think, it makes me think a lot about, like, I guess this would have been probably over Thanksgiving-ish. My grandparents are 93. They're still moving, walking. I will call them, you know, on a weekend, and my grandma picks up. And she's like, “I'm busy. Can you call me back in, like, ten minutes?” And I'm like, “This happens every time I call you. Like, where do I need to, like, send you a Calendly invite? Like, what's up?”

And so they are, you know, still doing great, still moving around. But my grandfather fell, I think, over Thanksgiving, and he's fine now. But he's a veteran, so we went to the VA. And it's definitely a thing, particularly in the Black community – I would imagine other kind of marginalized communities – where you get there, and my mom was like, “Yeah, we're going in the room with him because A, you want to have a witness, essentially, someone else who can be in the room to be aware of what's happening. And, B, you want someone else basically there to take notes, to scribe for the patient, in a lot of ways.”

And so something that I haven't seen a lot in AI medical scribe technologies – maybe someone should build this – is something where there's a patient-end access where you can also have your own notes, or get the information that your doctor is also getting. And so you don't have the formal SOAP note that your doctor puts in the system, that I know how to read, and I can understand it when I read it because I have clinical training. But the average person absolutely can't.

So I definitely think that particularly in the Black community, there can be a wariness towards the US medical system, the medical system at large, in general, especially when you get into mental health. And I think that when the concerns around AI bias, and the fact that some of these systems – you know, the one that I think of is from a Nature paper a couple years ago, where the system essentially disproportionately did not provide higher levels of care to Black people. And it was optimized to look at patients and determine, based on this patient profile, should this person be escalated to personalized care? But one of the big variables they used was health care spend. That brings in class, that brings in socioeconomic, all these kinds of things where your severity of sickness is not necessarily correlated with the amount of money that you're going to spend on your health care. And so it ended up disproportionately impacting people of color and people who were poor.
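The proxy problem described here can be sketched with a toy example. This is a minimal illustration, not the system from the paper: the `Patient` fields, the four made-up patients, and the `top_by` helper are all assumptions chosen to show how ranking on spend can select different people than ranking on a measure of sickness.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    name: str
    chronic_conditions: int  # hypothetical stand-in for how sick the person is
    annual_spend: float      # hypothetical health care dollars spent last year


def top_by(patients, key, k):
    """Return the names of the k patients ranked highest by `key`."""
    ranked = sorted(patients, key=key, reverse=True)
    return [p.name for p in ranked[:k]]


# Made-up data: A and C are sicker but spend less on care.
patients = [
    Patient("A", chronic_conditions=5, annual_spend=3000.0),
    Patient("B", chronic_conditions=2, annual_spend=9000.0),
    Patient("C", chronic_conditions=4, annual_spend=2500.0),
    Patient("D", chronic_conditions=1, annual_spend=8000.0),
]

# Ranking on the proxy (spend) versus ranking on the stand-in for need.
by_spend = top_by(patients, key=lambda p: p.annual_spend, k=2)
by_need = top_by(patients, key=lambda p: p.chronic_conditions, k=2)

print("Escalated when ranking by spend:", by_spend)
print("Escalated when ranking by need: ", by_need)
```

In this toy data the two rankings pick entirely different patients, which is the kind of gap that opens up when spend does not track severity.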

It's definitely an ongoing challenge, and I would love to see more community involvement in a way that is also not exploitative of communities that you're attempting to solve problems for. Because I do think that a big problem in the AI space right now is that there's lots of people who are solving problems that don't exist.

Yeah. And especially in the medical space, there are so many problems that need to be solved. You know, if you're familiar with femtech and the way that women's health is considered in a lot of studies, even a condition that affects women more than men. Alzheimer's is probably one of them, maybe just because of our longevity. But women don't have to be included in those clinical trials 2.

Yeah.

You know, and the mortality rate for Black mothers is so much worse than it even is for white mothers 3. And that's just really appalling. But there's SO many areas for opportunity where we should be doing more and doing better with addressing the biases that are already there. Because if you just draw data from the current biased system, you're going to end up reinforcing the biases with your AI tool. And that just makes things worse, it doesn't make things better. But it has this POTENTIAL to make things better and to recognize that and to flag those and to help to mitigate them. There's an opportunity and there's a challenge as well.

Absolutely, yeah. Something that I have talked about in content in the past, and I think is particularly relevant right now, I did a video. It was kind of a rant, because it took, like, 25 minutes. But I think the title of the video was, like, “Is AI too woke?” or something like that. And it was thoughts on the ‘woke AI’ controversy that has been going on for quite a while at this point. And a lot of that centers around bias and, you know, is this thing leaning too hard into political correctness? and things like that.

And I think, A, there's often a misunderstanding of how these things work and how training data and things like that come into it, and also how capitalism comes into it. And also liability. You want something that is safe for you legally as a company when you deploy it. But something that I've also talked about in content is the fact that you're never really going to not have a biased system. You just get to choose what the bias is.

And so when we think about something like that health care algorithm that determined whether or not people got higher levels of care, that was a system that was biased against people of color. And in order to fix that, you'd have to bias it in the other direction.

Bias is a statistical term, at the end of the day, that has become politically correct. And I try to facilitate conversations around that so that people hopefully get a better understanding of how things like that work. You can see it in the current landscape in the US around DEI and a general misunderstanding of what that includes. I don't know. I think that if people had a better sense of what it meant for something to be biased, and what it means to correct that, they likely would actually be a lot more on board with a lot of AI ethics work.

Yeah. I think it's an area that is really not well understood by most people. I think some of the awareness has started to grow because we're seeing some companies that are doing some things, or we're discovering that they're doing some things which are not really above board – stealing content, or mistreating data labelers, or so many other aspects 4 5.

There's some studies about women using AI less than men. You may have seen this study. One of the reasons that they cited was saying that women have more ethical concerns.

Yeah.

I just wrote a piece about that saying, you know, the answer to this is not to tell women to stifle their ethical concerns. The answer is, let's get more ethical tools 6.

Yeah, that disparity is very interesting to me for a lot of reasons. One of them being because I do think that the whole “Women don't use it because it's not ethical, so we should encourage women to use unethical technology” - like, what is that?

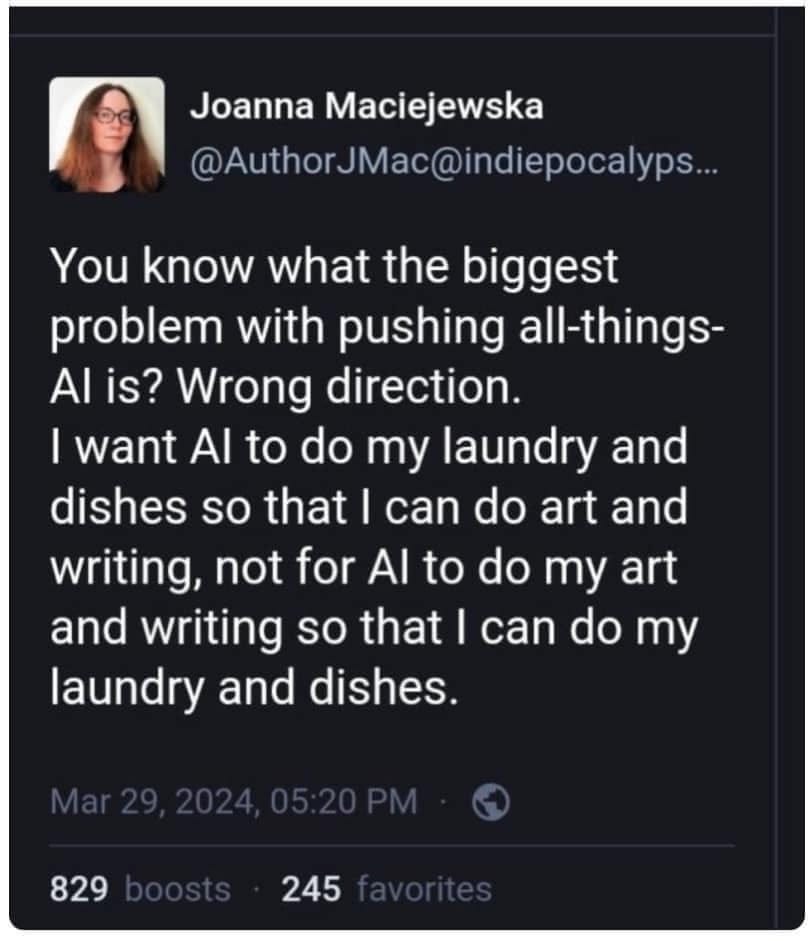

But I also think that it's interesting because it intersects with a lot of other things. So I did a newsletter a while back that originated from Physical Intelligence, an SF startup. A lot of the people who work there used to work at OpenAI. And they posted a video that was an AI-powered robot, folding laundry 7. There's a meme that's gone around for a million years that is basically a pull quote from an interview with a woman 8 who says something along the lines of, “I want AI to do my dishes and fold my laundry so that I can make art, not make art so that I can do my dishes and fold my laundry.” And so I saw that, and I was like, “Hey, AI, they can fold my laundry. This sounds great.”

And in the process of making that newsletter, I fell down a bit of a rabbit hole around the industrial revolution in the early twentieth century, and the fact that a lot of automation, particularly as it relates to kind of domestic tasks, actually created more work for the average housewife. Because in creating the dishwasher or whatever, creating box mix for cakes, there was this sense that, “Oh, you're not working hard enough”, or, “Oh, you're lazy.” And so that's why box mix used to be a ‘just add water’ situation, and they ended up making it so that you had to add eggs and oil, so that it felt like you were working hard enough to make a cake.

So I could 100% see something like the AI folding laundry bot becoming another one of those things where, in adding household automation, you end up actually creating more work for women. Because that disparity in, essentially, how much time the average woman spends on domestic housekeeping, versus the average man in the same household, still persists. I think it's something like seven hours a week or something like that.

I think that the ethics thing is definitely true. But I also think that women just don't have time <laugh> to get into these things and men do. And so I think that that's also one of those things that's super underrated.

Yeah. That differential of having the spare time to experiment with AI. I made a longer Substack article about that after I posted my little rant on LinkedIn, and that was one of the aspects I called out. There are plenty of other reasons why women might not use it as much, and that was one of them.

Yeah.

They're still working their double shift at home.

Absolutely. Yeah. It almost circles back to the femtech VC funding situation. It's another facet of undervaluing women's work and making it harder for women to chase opportunities that men might find it easier to chase. And that's even controlling for other factors that might limit their ability to do that.

So something that I have seen more of, which I love, is groups. Because, I mean, I'm 28. I'm technically Gen Z. I kind of ride the cusp between elder Gen Z and kind of a baby millennial. And I've seen a lot more of women in senior management, learning about AI and kind of creating their own groups around it and creating their own initiatives. And I think that's great because that's also where you see the lowest adoption of AI in corporate settings. And I would argue that's also probably one of the places where you need it the most.

Something else I'd also love to see: outside of Boston, Greater Boston area, a woman started a co-working space that offers day care. So it's designed for moms who are entrepreneurs, where they're able to co-work, work with other people in their space, while still being able to have childcare handled for them. And they're one of those groups where I would love to see them also doing more AI stuff, because I feel like that's a demographic that you don't see a lot of and could do some amazing stuff.

So the places where I am, I think, disappointed by AI tend to be the places like that, where I'm like, there are so many amazing opportunities and so many people who are doing the work to create those opportunities. And the people at the top who provide the money are not necessarily compelled by those types of problems because they're usually mostly rich white guys.

Yeah. It's interesting – the Harvard study about how women use AI less than men, they basically surveyed 17 other studies, and then they did their own study doing an evaluation with people in Kenya through some Meta ads. But the one study of the 17 that they found that showed that women used it more was by, I think, Boston Consulting Group, and they found that older technical women used it more than the men.

Ooh.

And that was the one exception. Yeah, I'll share the link to that with you [study link]. I thought that was pretty interesting. You have to get into the whole study. The article’s a little click-baity.

Yeah. That's always a pet peeve of mine that I've ranted about, on Bluesky mostly. I think, once a month, I basically tweet, like, “Link the study in the article so that I don't have to go find it, because you probably didn't represent it correctly.” And you might have tried really hard, and I get that. But yeah, I want to read the actual study, because chances are there's stuff in there that should be highlighted, and isn't necessarily. And, typically, that's more about study design.

The Microsoft study around critical thinking and AI use is a big one at the moment. Because the way that they measured critical thinking was kind of weird. But it is one of those general things that is, I think, a broader science communication challenge that is particularly relevant during this kind of big AI push.

Yeah. It sounds like we're of the same mind, because when I published my Substack article about the article, I also put in the links to the actual studies. It's like, “Here's the full link, and I encourage you to go read this. Read the study and not the article because the study brought out some of the nuances of the systemic effects that are holding women back from taking up AI as much.” The study called that out. The article really just said, “Eh, women just need to try harder, and companies need to push them some more.” Well, that's not what the study actually said.

Nope. And a lot of the consulting work I do, I take a very bottom-up approach to it, for two reasons. One, because I think that there's, like, a general industry term that goes around, which is ‘shadow AI’, which is kind of your employees' use of AI that you don't know about. And it's typically seen as bad, which for liability and compliance reasons I get. But also, I think that there's a lot of interesting and useful information in that. Because your employees are experimenting with this, and they found things that work really well for them. And that's likely not going to be something that appears if you do a top-down push of AI in your company.

I primarily work with mission-driven startups, founders, small businesses. I think people often get confused when it comes to my public profile around content and newsletters and things like that and AI, and are confused as to how I could also do consulting in this space, for implementation and things.

And my comment is always like, “Well, a lot of the time I work with people and I tell them to use a lot less, because I'm very problem-first.” There's a company that I worked with recently. I think they have a staff of eight. Across the eight staff members, they were using 30 tools! And I was like, “Yeah, so we can consolidate that down to six.” <laugh> Like, we don't need to be doing all of this.

My best friend, actually, her company just started having them use a voice cloning tool similar to something like ElevenLabs. And the idea is that you make a presentation and then you have your voice cloned to give the presentation, and then they get sent to other people. At which point, I was like, “Well, you could just talk through the presentation instead of having to, like, write a script to give the presentation and then upload it to whatever this thing is and then have your voice like … this seems completely unnecessary. I don't understand why you guys are doing this.” And she was like, “Yeah, none of us do either.”

So it's a landscape that often does not address real problems. And I think that a lot of those real problems, prescient real problems, often come from women, and people of color, and queer people, and neurodivergent people, and are also reflective of the systems in which we already exist, which is often very hard to convey in a TikTok.

Those are all great perspectives. I've always been an advocate of “doing the simplest thing that could possibly work”. And a lot of problems do not need AI or even machine learning to be solved well. The simpler solutions can be easier to understand, easier to explain. They can run more efficiently. And there's so many other reasons why you really don't necessarily want to use AI or machine learning for something.

I'm working on a chapter on AI and data in a book for entrepreneurs. And other people, other experts, are writing the other chapters on business law and such, but I'm writing that one. And that's kind of my point is that you want to use some data. You want to, probably, use some AI. But you don't want to overdo it. And so finding that balance is part of what you need to do, both to use it ethically and to use it wisely.

Absolutely.

So you mentioned a lot about different aspects of ethics and using AI tools like Claude and ChatGPT. I thought it was interesting that Claude is, I think, so far the only, but definitely the first, to have ISO 42001 certification, which is based on them sourcing their data ethically and developing it responsibly. So Claude gets a plus in my book for that 9. You mentioned that you use it almost daily. Could you maybe share some specific stories about how you use it, and how well it works, and maybe what doesn't work so well and what does?

Yeah. I think a lot of the time when people talk about, like, “Here's the best AI tool to use for blah blah blah blah blah”, it's very almost practical. Like, it has this feature. It has this feature. It has this feature. And for me personally, a lot of whether or not I use an AI tool comes down to effectively three things.

One is ethics. If there's something about it where I'm like, “Ugh, this is particularly bad”, because a lot of AI stuff, you do run into the ‘No ethical consumption under capitalism’ problem. But if there's something that I look at and I'm like, “I'm not super comfortable with this”, then that's going to be a no.

Another thing is: is it useful? Does it fit into my workflow? Is it something that solves a problem that I actually have, in a way that is actually helpful for me?

But the third is kind of vibes, and I think that Claude really gets me on the vibes. The tone that it has, I kind of love. I've always found ChatGPT voice to be very annoying. And I also think that it isn't as – I don't want to say ‘good’ at writing, although that is true. But it's just not as personable, is, I guess, the best way that I could put it. And so I just enjoy the experience of using Claude, more than I do using most other AI assistants.

I do still use ChatGPT. I haven't really used Grok, but that's more of an Elon thing than anything. Gemini, I find to be a little bit wonky. As a disclosure, I do get access to beta versions of most Gemini versions. So I get early access to these things because I know people at DeepMind.

Yeah, I think what I use, and what I use it for, often does come a little bit down to, like, my personal experience with using these things, and whether or not it's enjoyable.

So when I use Claude, a lot of what I use AI assistants for – and that can be ChatGPT or Claude – is brainstorming, specifically as it relates to almost – I don't want to blame it entirely on my ADHD, but a little bit of managing my ADHD, where I have all these things. They're stuck in my head. I have what I call a ‘trigger list’, which I 100% took from some other productivity creator somewhere. And essentially, it's a list of, like, “Here are the kind of high-level categories of my life”. So that's things like home. That's things like health. That's things like PhD. That's things like content. That's things like consulting. And then under each one, there are, kind of, the projects that I'm working on. And the idea of that list is that on a Sunday night or whatever when you're prepping for the week, you can look through that list and start making a ‘To Do’ list based on what you see there.

I've tried doing that by writing by hand, in the past, and my brain runs faster than I can write. And I also will often forget something. Or I'll have a thought in the middle that it's just hard to capture from writing. And because of that, what's helpful for me is, therefore, talking into my phone or whatever for fifteen minutes, talking through each bullet point, and then having an existing prompt, essentially, that I use that takes, “We have this trigger list. We have this twenty minute transcript of a note. Let's extract all of the things that you talked about for the trigger list from that. And also anything that kind of doesn't relate to that - let's also pull those things out, and you can kind of categorize them later.”

And so I do that. All that goes into Notion. Currently, it's manual. I used to have it automatically do it using Zapier. I stopped because I was using ChatGPT for that, and I didn't find that it was very good at parsing that information. I might see if I can throw Claude in on the back end. It's one of those projects that's not super important to me right now.

But I think that's definitely a big way that I use it, because I think it's helpful as someone who gets kind of stuck in my own head and overthinks a lot. And it's also helpful as someone who, I guess I would say, often arrives at the right idea the first time and then second-guesses it. And then I forget what the right idea was. And then, you know, two weeks later, I'm like, “Oh, no. What am I gonna do? Like, da da da da isn't working.” And then I'll, like, brainstorm some stuff. And then I'm like, “Oh, yeah. I had this other idea before”. And then I throw it into Claude, and Claude's like, “Yeah. You did have that other idea before, and it's probably correct.” And I'm like, “You're right. That's unfortunate.”

So I think it's helpful to stop me from over-complicating things, but also to just get me out of my head and to the point where I can kind of get to the work that I actually find useful and interesting and things like that.

I think the other thing that I do use it for is when I work with consulting clients. I work with small businesses and people like that, but also with small business agencies.

And then there's people who follow me on socials who are interested in what I call a “3-month AI rebrand”, repairing your relationship with AI. The idea is that I have my own framework for how I interact with AI on a day-to-day level, and how I think about incorporating it or not incorporating it. And part of that is because the landscape is really, really overwhelming. As someone who exists in this landscape, who works in this landscape, I essentially stopped using AI for, like, six months, in, I guess, 2023, because I didn't like the content I was making, didn't know whether the opinions that I had or that I was kind of espousing were mine. And just needed to take a step back and get a better sense of, “What are the actual problems that I have? What do I actually think about these things? And how can I kind of reintroduce that to the work that I do?”

And so that's kind of the other work that I do with people, and then you can develop frameworks around that that help you navigate the space. Based on that, the one-on-one work that I do is going through that three-month process. For me, it was six months, but I went through it so it's shorter for everyone else.

And for companies, it's often developing guidelines that are kind of the more formalized version of that framework, and then developing trainings and company culture. And a lot of what I do is effectively change management, in a lot of ways. And I think that that's helpful for people.

And when I work with people for that, I do tell them that I have an automated notetaker. I use Granola. It's my favorite at the moment. It works on device. It does not go to a cloud. I can automatically have people sent the notes, but it is otherwise local. It is Mac-only.

And I will take those notes. And, for any particular client or project, I likely have either a Claude or a ChatGPT thread. And the idea is that, you know, for a small business that I might be working with, I've met with their whole team. I've met with 10 to 15 people. How do I take all of those interviews and pull information from them? And so as opposed to reading through them myself, reading through transcripts, things like that, it is often more efficient to just be able to upload them to usually, Claude, sometimes ChatGPT. Granola also lets you query things explicitly, which is why I use it. It also lets you do the thing of, like, “What just happened in the meeting that I was already in?”, which is very helpful for me. I will also use it for essentially project management, for the people that I work with.

So I think those are the main two ways that I use it. Outside of that, it would be editing video, largely. I did not get into content because I enjoy editing video. I really don't. I used to have an editor. I stopped working with them largely for financial reasons that were on my side, not on their side. It was because I had backed off of making content. So cash flow wise, it didn't make a ton of sense. I could 100% see going back to working with an editor at some point for my content. But in the interim, a lot of what I need to edit is pretty straightforward. And I'm not using AI-generated images or video, which is something that I really try to avoid. So it works.

Yeah, you just crossed into the next question that I normally ask people, which is about when you've avoided using AI-based tools. You mentioned just now that you don't use them for images and you try to avoid that. Can you talk a little bit about that?

Yeah. I think I'd say there's two reasons why I largely don't do that. If I'm going to use it, it is going to be through something like Adobe Firefly or through some other platform that has worked with the creators of the training data to make sure that they have opted into their content being used this way, and that they are being compensated in some way.

Adobe is one company that does this. Canva is another company that does this. In Canva's case, creators are compensated for having their content added to the stock system. I don't believe they're compensated for the AI use on top of that, but don't quote me on that. But if I'm going to use AI-generated content, it's going to be from that.

But outside of that, I just don't think that it's right for me personally, to create an AI-generated video based on the artistic works of other people. I guess I would say, there's kind of the moral high ground level on which I don't do this, and then there's the almost vibes level on which I do it.

And the vibes level is that I feel like - there's actually a company right now. I can't remember what their name is, but I think they're Y Combinator backed. And they are an AI generated content production studio of sorts. And Curious Refuge is another. I think they just got acquired by this company, actually. But they are both companies that create AI-generated short films, commercials, advertising, as well as teach people how to do that. And I find that AI-generated video, in particular, looks bad. I think it comes with the ‘uncanny valley’, certainly. I can't articulate what it is about it. I just look at it, and I'm like, “No. It just doesn't look good. I don't like it.”

And so I think that even if I didn't have the ‘moral high ground’ level, I would look at it and be like, “Eh, I would rather just pay for quality content than look at this, which is … weird. It's, like, shiny. I don't understand it.”

Yeah. I know a lot of people have different objections for different reasons. Some people, like you said, it's the ‘uncanny valley’ sensation of this picture. There's just something off about it. We can't always name it or describe it, but it's sort of the ‘we know it when we see it’.

And there are also communities. You mentioned that you're on Bluesky. I need to go find you there if I'm not already connected to you! But when I first joined Bluesky, I had this avatar generated on my phone, and I used that as my profile picture. And I had some people tell me right away, “Okay. It looks like you use AI art, and that's frowned upon here. You’ll get yourself on some black list. You need to really put up a real picture.” Okay. Fine. I did.

But some people have very vehement objections. There are people I see on Substack who say they won't read an article if the post picture is clearly AI-generated, because they have this, like you said, sort of shiny or this sameness that is a big tell to some people. And some people attribute it to being lazy. I think it's actually probably sometimes quite a bit of work, so I don't know that it's being lazy. But they just say “If their artwork isn't their own creation or a human's creation, their writing probably isn't either, and why would I bother reading it?” So there's a lot of different attitudes toward what we perceive to be AI-generated content, and some people just say, “I want words written by humans.”

Yeah. I feel like a lot of my content in my work is being the queen of nuance. And I often find myself a little bit frustrated with people who are very anti-AI for that reason. And I think that some of that comes down to - I mean, I do generally think that, particularly on Substack where you can get the stock images from Unsplash or whatever, as part of the thing - like, you don't need to generate AI content. Does it really help you? I feel like it doesn't.

So I have an AI use disclosure at the bottom of my newsletters. And typically, that disclosure says something along the lines of “I dictated a bunch of stuff into my phone. I use MacWhisper.” Apple voice notes now automatically transcribes your work. I took that. I probably threw it into Claude. I have a very detailed custom prompt where a lot of it is like, “Keep my voice. Do not change my voice. I want it to sound like me.”

And that will be, I guess I would say it's a first swing of a newsletter. What I then end up doing is we basically have one window with that open, and then a blank draft in a different window. And I look at that, and I'm kinda like, “This didn't totally get what I wanted. But now that I have something to bounce off of, I can start writing.” And I also have now all this reference material stuff that I was already able to dictate.

So I do think that when it comes to writing, it's not quite as black and white as, like, “you should never use AI in your writing”. I just think that how you use it is very important, whether or not you're capitalizing on someone else's work and someone else's voice. And, obviously, if you're fully generating your newsletters via ChatGPT, then, like, no. But I definitely see that, and I get it.

I personally find it really helpful. I think that there's more nuance in the space. I also think that you can get into a little bit of an ableism territory, when it comes to being that anti-AI very quickly.

But I think that those are the things where I think people have very knee-jerk reactions to things. And I get that, because I do that. Everyone does that. And I think that when I have those kinds of reactions, where I'm immediately, like, “Oh, I feel very opposed on almost a personal level”, that's usually a moment for me to take a step back and be like, “Why do I feel this way? Is it actually about morals or whatever? Is there something else happening here?”

And I think that a lot of AI discourse can kinda benefit from taking that step back and understanding where that comes from. And also I often think that, or at least I've found that, a lot of people who have that knee-jerk reaction, are people who are very black and white about these things – don't engage with the landscape. And I think that's really unfortunate, because I think that ‘knowledge is power’ and all that.

And, by opting out, if opting out to you means just, like, fully putting blinders on what's happening, then to me, that's almost worse. Because the way that you effect change in this space is by knowing what change you can effect in the first place. And that requires you to kind of keep up with the landscape in general.

And that's not an individual responsibility thing, I think. You know? Follow me on TikTok and Substack and all that. There are resources for these things.

I think that there are interesting conversations to have in this space, and that it's not always quite as black and white as, like, “This person used an AI-generated photo, therefore, you should ‘throw the baby out with the bathwater’.”

I guess my other slightly spicy take in this space is: a lot of this, and in the US at the moment, policy is a nightmare. But so much of this comes down to ownership and how we legislate ownership, and how legislation around ownership informs compensation and acknowledgment, and things like that.

And so I do think that if your complaint around AI-generated video or things in that space, is around ownership and attribution, I think it's fine to call out individuals. But I think that you could effect much more change by advocating for policies that require that at a much higher level, such that people at an individual level are not able to do those things. Or have to, you know, actively use them or whatever it is. Yeah. Because I think yelling at individual people, I don't think, is ever a particularly great way of effecting change and coalition building and motivating people and things like that. It's usually not very helpful.

Yeah. You mentioned that you made some notes in your newsletter about, you know, I use Claude for this, and I did that. I think transparency is the one thing that I think I wish for the most, and that I hear the most from my interview guests is that, if you're going to use AI, just say it. You know? “Hey, here's what I use.”

I put up an AI policy a year ago when I first got started saying, “Okay, I'm not going to use it for my writing.” Part of it is the ethics, but part of it is just that for me, writing is thinking, and I'm not going to outsource my thinking. I just find it works better for me if I just write.

Yeah.

And, grammar or other assistance, I don't always agree with them, so I don't use them that much. But at the same time, you had mentioned ableism. If I'm writing in English and someone else is writing but it's not their native language, it's very unfair for me to say, well, they shouldn't use that to help them improve their grammar. Like, go for it. I choose not to use it, but that doesn't mean I should be judging anybody else who does. I don't feel like that would be right.

So there's, I think, like you said, some nuance to this. I like the Unsplash images. I can almost always find a good one that fits what I want to characterize in my article. And so there are ethical options in a lot of cases.

And I think the bigger push, like you said, at the higher level, we need to be advocating for us all to HAVE ethical tools to use. And then we don't have those objections. Or tools that are better and not all shiny.

Absolutely. Yeah. I think it's the whole, what's the saying? ‘The moral arc of history bends towards justice’, which I believe is typically attributed to Martin Luther King, but I don't think it actually originated from him 10. I can't remember now.

But I think that it's one of those interesting sayings that, in the US is commonly assumed by kind of the center left, honestly, in that, you know, people will inherently become more progressive over time, things like that. And it's like, no. Like, things happen. Movements happen to make that happen. Like, the moral arc of history is not bent towards justice unless you bend it that way. And there are plenty of people who are trying to bend it elsewhere.

And so things like developing ethical AI tools and being intentional about, you know, how you use AI and also just, like, having conversations about your AI use and, you know, reading a newsletter like this, or listening to a podcast like this. Like, all those things are things that move the needle in the first place.

Yeah. You mentioned compensation and giving credit. There's something called the “3C's Rule”11 where creative people should have the right to Consent, to be Credited, and to be Compensated when their work is used. And not just for AI, but certainly for training in an AI-based tool system. So what are your thoughts about that? I know you mentioned ownership and legislating it, and that's certainly a challenge.

Yeah. It's one of those things that I think should be true. I also think that, I don't know, it's one of those things that you could very easily write into law. And also the process of getting it there, I don't even know how you do that. And I think that's always the challenge with, I don't know, these kinds of things where I would love to see that become a norm in this space.

And I also don't know what that path looks like. In the US, arts are typically just generally undervalued and underfunded in the first place. And so the weight behind that is limited.

I also think that there's a practical slash technical challenge of, like, if DALL-E, OpenAI's image generation model, generates an image, what does it look like to compensate the artists that were involved in creating that image? That gets a little bit into, like, algorithm development and the fact that you can't necessarily, unless you say, you know, “I want X Y Z image, developed in the style of Degas” or something like that. It's otherwise quite hard to attribute ownership in that way. Because there isn't really, like, a 1-to-1 link of, like, “You used X training data, and it resulted in this pixel looking like this”. Like, that's not really how it works.

So it's one of those things that I definitely would love to see. I think that honoring people and their work is really important, and something that at a high level, we should value and actively advocate for. And then I think there are also just the practical constraints of, like, “Okay, well, what does that look like, in the first place?”

Yeah. You had mentioned Adobe and Firefly and them getting permission for the sources that they use. I remember when they first announced their new tool. I think it was a year ago, February. And wow, they're saying that they're only using these ethical data sources, and that's really awesome. And then it came out that they used, I think, 5-10% images that were generated by Midjourney.

Mmhmm.

Which kind of polluted their claim that everything that they generated was enterprise safe, and you don't have to worry about infringement or licensing; because now you've polluted it with Midjourney.

Yep!

And I also had interviewed a technical artist who was a long-time Adobe user, and his content in the files was used without his consent. And he never got compensated. And so he said, well, Adobe SAYS they're doing this, but they really just took advantage of all of their paying customers for years, and took advantage of using their artwork. And so now that's being used to compete directly against him.

Yep.

And people are generating images, and they're not hiring him any more for his business of twenty plus years. It's basically gone down the tubes.

You know, it's great when we find companies that are trying to do the right thing. But I think we need to keep the spotlight on them and just make them aware that they can and should do better. Of course, some of them don't even try, which is even harder.

Yeah, yeah. I think the most ethical option is often still not particularly ethical, because of the landscape that we exist in. And I also have noticed this is actually something that, because I'm a YouTuber and so I'm in the YouTube space. I don't know that I've heard this from Adobe in particular, but there are a few other content licensing companies that I've heard of this happening with, where a creator will be like, “Oh, I pay for access to the library that this company offers. I found this video that aligned with the thing that I want to showcase in my video, so I used it in my video.” And then my audience was like, “I can't believe that you'd use AI-generated content. This, like, totally changes how, you know, I think about you as a creator, blah blah blah.”

And I’m there like, “Wait. I didn't?” At least I didn't think I did. Because that's WHY I went to this platform to get stock footage made by people. And then it turned out that that platform had the kind of AI slop problem of AI-generated content leaking into their platform, and they didn't know it. And that does very much feel like a bit of a due diligence issue of, like, “Okay, well, how did this end up here and you didn't know it?”

But I also think that keeping more pressure on companies is definitely the correct thing to do in this space. I can also understand the ongoing challenge that is: How do you develop systems that can identify AI-generated content when you are always developing a system that is trying to catch up with the latest thing?

Generative adversarial networks were the initial example of this from an architectural standpoint, where the idea behind a GAN is that you have one model that is generative. It generates content. It starts with white noise effectively. And it tries to fool the adversarial model, which has a sense of, like, “here's the output that I'm expecting”. And you go back and forth, and back and forth, until the generative model is able to fool the adversarial one. And I think it's an interesting ongoing challenge. You have these tools that are generating new content, and you need these adversarial systems that can identify them. But the adversarial systems are always kind of playing catch up with whatever we're generating anew. So I can appreciate the challenge that companies, who are trying their best, come across when it comes to these things? And, also, I think that a lot of companies could be trying much harder.

Yeah, you mentioned the adversarial tools. I don't know if you've heard about these tools called Nightshade and Glaze that some people are using to try to basically poison their online images so that they look fine to the human eye. But if an AI tries to train on them, it'll end up thinking a dog is a cat or something like that.

Yep.

But then it turns into a bit of an arms race, where the people who want to use those images anyway are going to try to find a way to overcome the poisoning.

Yeah.

And then the poisoning tools get better. So it's a bit of a chase there.

Yeah, and this is something that I have to remind myself of, on, like, a daily basis, as someone who is in this space. And my friends are not necessarily in the AI space, but they are people who have the time and the resources. And I don't think you need to go to, you know, an Ivy League school to have the education slash critical thinking or whatever to be able to integrate these things. But I think that having access to that can facilitate it. And if you don't exist in a system that effectively imposes it on you, then, you know, you don't necessarily know about it, and you might not have the incentive to do it.

I will look at things in the AI space and see something like Nightshade and be like, “Oh, this is awesome. Ideally, this will effect a lot of change.” And then I usually have to take a step back and be like, “How many people are using this relative to the wider training data, to the wider kind of artistic community?”

Internet bubbles are a thing that has been around, I don't know, for at least, like, fifteen years now, if not more. But I think that's one of those things where it's very easy to look at something in the AI space and be like, “Oh, I think that x y z should happen even with artists.” You know, there are many people who are like, “I strongly disagree with my art being used for blah blah blah.” And they're not necessarily aware of, or hearing from, people who disagree with that.

And so it seems like that stance is self-evident, and it seems like something like Nightshade is inevitable. And it's not, necessarily. We already live in this weird multiverse of people existing in different realities and having different factual bases, so to speak. I think it's hard to acknowledge this on, like, a day-to-day, minute-to-minute level, and, like, understandably so.

But I think that that is a big underrated part of anything from, you know, ownership to copyright to ethical technology development, particularly in the West. Like, we live in a capitalist society, and so you can develop these amazing tools. And they can solve a real problem. And if no one hears about it, if no one gives it the money, if people don't value it, it's not going to take off, and you might not hear about it in the first place.

Yep. Yep. That's fair. So one thing I'm wondering about: As members of the public or as consumers, our personal data and content has probably been used by AI-based tools and systems. Do you know of any cases where your data has been used that you could share, obviously without disclosing any personal information?

Oh my gosh. I mean, probably. I feel like there's the easy examples of being a relatively public figure. I remember when my YouTube channel was, like, very small. This was in, like, year 1 or 2. I used to live in Kendall Square, which is where MIT is. And I was waiting for the train, for the T. And somebody ran at me yelling my name, and I was like, “Oh, I'm being attacked.” And it turned out it was someone who watched my YouTube channel, and they were really excited to see me. And I was like, “That was terrifying. I'm glad you like my content, but please don't do that.”

I'm like a very minor public figure at the end of the day but still exist in that space. Fake profiles of me, fake channels of me are super common. And so there's, not even at the AI level, that persistent issue of people taking my likeness and my data and using it for their own profit and gain. I don't think that that's something that the average person really relates to. It's an occupational hazard that I kind of knew going in.

Outside of that, I'm sure there are other ways - data leakages, things like that. I had my identity stolen last year. That was a lot of fun. I don't know whether that had anything to do with my public profile. It probably didn't, but that was a good time.

Outside of that, I'm sure my data has been used by other people to profit for their own gain, whether it be voice cloning or deep faking or something in that space. I think that I'm probably a little bit more numb to it than I should be.

I think that the job that I have means that I expect that on some level. And the content that I make certainly advocates for people to be very sensitive to it and to push back against it when they can. But for me personally, there is a level on which it’s kind of like, well, there's hundreds of hours of video and my voice that anyone could use at any time. And I could go after them for using my likeness. But, like, at what point is it necessarily worth it? Who knows?

So the short answer is yes. I'm sure it has been. I think the medium answer, compared to the longer answer I just gave, is: it's kind of my job to be exposed to that.

So one last question, and then we can talk about whatever you'd like to add to the conversation. People's distrust of these AI tech companies has been growing. And partly, it's because we're finding out about what they're doing with our data that we didn't really sign up for them to do originally, and that's probably healthy. But if you think about all the companies that are using our data now, and the ways that they're using it, and us wanting to be able to build up a sense of trust with them, what's the one thing that you think these companies would need to do to establish and to regain our trust and keep it?

Transparency is the big one. I also think that trust is an interesting word, I guess, to me? Because for me, trusting something comes down to expectations. It's about consistency in terms of, like, you say you're going to do X, and you do X. I know that in this interaction, you will respond in a way that is consistent. And so there are many companies that I trust to do things, and do not like the things that they would do, and I would not use them.

And I think that's where transparency is a little bit interesting to me, because keeping your trust and keeping your money are two separate things. And I think that transparency is definitely the best thing that AI companies could do to earn my trust and to earn the trust of the general public. And that gets a little bit more complicated in terms of, like, how do you communicate the realities of these things in a way that resonates with the audience they're talking to?

But I also think that there's a little bit of awareness that, in developing trust and being honest and transparent about the things that you were doing, people might not like you, and people might choose not to use your product or work with you or whatever.

And this is something that I deal with as a content creator. The people who I want to follow me, I hope to gain people's trust in terms of consistency in what they can expect from me. But, like, if what you can expect from me is not something that you want to devote your attention to, then, like, “Cool, that's fine - have a good one.”

There's a study around AI literacy – there have been a few studies, but there's one that's coming to mind – that basically found that people who are not particularly AI-literate are more likely to use AI because they tend to fall prey to the hype around it, and the magical thinking. And as people become more AI-literate, they become more intentional and kind of critical of the technologies and their use and things like that.

And so I think the thing that got some people about the article that I'm thinking of in particular was the kind of conclusion of the Abstract was like, “If companies want to continue to retain consumers, they should maybe not demystify AI all that much, because otherwise, people will not buy your product.” And I was like, that's not great. But it's also true.

So I think that the most important thing that an AI company can do to earn my trust is certainly to be transparent about what the thing does, what impact that has on me, what impact that has on my wider community, and the wider world. But I also think that in doing so, I suspect a lot of major AI companies would lose their consumer base. And I would hope that that then leaves some space for other companies that you should trust, and actually use, to come into that vacuum.

Almost everybody that I've interviewed has ended up saying that companies need to be more transparent with us, not giving us 10 or 20 page terms and conditions that are written by lawyers that people can't understand. It's like, “So what are and aren't you going to do with my data?”

Yep.

And that's one aspect of it. Is there any other specific aspect of transparency that you feel like you would want companies to be more transparent about? You mentioned a few things, but just if you pick one thing, what would you want an AI tool vendor to tell you, that they're really not currently telling us for the most part?

Training data disclosures would be the big one. Model cards were some of the work that I know Joy did. I can't remember the name of their earlier work. That was basically nutrition facts for datasets. That was easier to do when datasets like ImageNet were academic datasets, and so they were open sourced for noncommercial use. You know, all these major AI companies have these proprietary datasets they don't share with everyone or anyone. But I think that understanding what goes into this thing, such that you can understand what comes out, would be one of the very big things about earning and keeping trust.

Sometimes I do research and I come across a study. I did one recently that was, like, the Center for Family Studies or something like that. And then I looked it up, and I was like, “Okay, so this is, like, center right at best, and that is the lens through which they're communicating this information.” I may not agree with them in terms of their stance. It's a similar thing with training data, where it's like, “Okay, most training data is, like, Western English language. How do I think about that in the context of what this thing is telling me?”

Yeah. That's a great example. Are you thinking of one of the initiatives from the Algorithmic Justice League, maybe?

Yeah, yeah - Joy. She was Media Lab. I used to work in Media Lab. She’s awesome.

Oh, nice.

She does a lot of awesome work in this space. But, yes, I think she and – it might have been Deb Raji and maybe Meredith Whittaker; I'd have to actually look at the studies. I can't remember now off the top of my head because this was, like, six years ago.

But people in the AI ethics space – which, particularly in those days, tended to be disproportionately women and people of color, because of course – developed what were nutrition facts for models. Like, here are the things that you should know about this model. Here are the things you should know about this dataset. Here are the sources, roughly, of this dataset. Here are the people that were involved in collecting it.

I mean, ImageNet's a good example of this, in that ImageNet is an image dataset labeled with text, for image segmentation and things like that. And some of the labeling initially was done via Amazon's Mechanical Turk, which is a, I guess you could call it a gig worker platform. You get paid, like, 5¢ to label an image. It tends to be largely dominated by workers from third world countries where the US dollar stretches a lot further. And you would have particularly, like, people of color, you might have a Black person being labeled as a gorilla.

And so, like, knowing the cultural context behind, and the labor context behind how these datasets originate, I think, would go a long way towards earning and keeping trust, just in terms of expectations around what you should expect for these models and how they work.

Yeah. Training data transparency is a big one. That's one that people seem to have latched on to a little bit sooner. I believe it's covered in ISO 42001. And the group that Ed Newton-Rex started last year called Fairly Trained, where they certify that the company has only used ethically-sourced data: they got licenses for it, or it was true public domain, not just ‘publicly available’. And that's what they focus certification on.

But the other aspects, like the data labelers, and being exploitative about how they get images labeled and get them corrected, even at this point, I don't think the EU AI Act covers that aspect of it. And I don't think ISO 42001 does either. So there are still some gaps. But the training nutrition cards sound like they would be a great start. And do you have a sense for why they haven't taken off?

Lack of pressure would be the big one for me. Model cards were a very academic endeavor, and industry AI development, commercialized AI development, has overtaken academic influence in this space by quite a lot over the last four years. So I think there just isn't really a lot of pressure to do that.

OpenAI does still release model cards for all of their models, I believe the last time I checked. It's a similar problem to reading a privacy policy where it's like, this is a 60-page document that if you don't have an academic background, you're not really gonna be able to parse anyway.

Yeah, so it's not like a nutrition label on food.

No. It's not simple. It's, in theory, for people who have an academic background and practice. Whether or not you want to say that this is an intentional obfuscation of the information for the average person versus, you know, they're actually releasing a detailed academic article, blah blah blah. I think it could be both.

But I think that there is no incentive to give people something simple that the average person could understand. And I will say, in slight fairness to these companies, what would be on that nutrition label, I think, would be an interesting and complicated discussion.

So they might get themselves in legal hot water by putting the truth there?

I mean, I'm sure they would. But what information do people want to know? What information is important for people to know? Like, how many people actually read nutrition labels when they go to the store? Not that many. Power users, essentially. What do power users want? They typically are people who are on diets. The equivalent of that in this space is people who are really interested in AI ethics. It's a very small portion of the population.

So it's a problem that extends well beyond the AI space. But I do think that, almost similar to – I don't remember when this regulation was passed in the US, but it must have been when I was in, like, high school – where menus had to have the calorie counts for each thing on the menu. As someone who's been in recovery from an eating disorder for years, I have many thoughts on whether or not that is actually useful information for someone to have on a menu. But that's something that you can see that might be like, “Oh, maybe I don't want that thing.”

And I think that having something similar or potentially more informative than just calories on a menu, when it comes to models, would be a good starting point, at the very least, you know? “What percentage of this data was stolen from people who were uncompensated?” I'm sure no company is going to do that. You get the point.

Yeah. Well, I could at least say what percentage is traceable to a public domain or licensed source. You know, just because it isn't traced doesn't mean it was stolen, so they wouldn't be necessarily admitting to a crime.

Yeah.

Alright. Well, this has been a lot of fun, Jordan. So that's the last of my standard questions. Is there anything else that you would like to share with our audience or add?

Nope. You can follow me on Substack, YouTube, TikTok, Instagram. Everything's under my name, Jordan Harrod. Like I said, I do consulting work, 1-on-1 work. There's more info about that on pretty much every platform that I post on, so feel free to reach out.

And also let me know in the comments of various videos. I mean, something that I'm thinking about a lot right now is the climate side of things. So if people have questions about that, then I would love to hear it because I think that's another space that lacks nuance in very interesting ways.

Yeah. I've been reading up a bit more lately on the impact of AI and specifically generative AI on data center demand, water usage. There's some studies that apparently didn't accurately state the water usage, and, obviously, there's some changes now. The models are bigger, but some of them are like DeepSeek, so more efficient.

Yeah. Yes.

It'd be great to get a good clear perspective on where we really stand right now and if we're making any headway.

Yeah. I'm working with another YouTuber on a video about that right now. [Out now! See “Should I Feel Guilty Using AI?”] And, the short version is that it is almost a training data problem. Because companies don't release metrics on any of this stuff, because they have no incentive to. So a lot of these studies are kind of guesstimates based on information we do have. Eternal problem.

And also, whenever I post a TikTok about how AI is very helpful to me and people complain about how, you know, “Great to see that we're burning the planet so that you can brainstorm things.” I'm always like, “Yeah, the average TikTok user spends forty five minutes on TikTok a day. Actually, I think it's sixty minutes as of last year. Based on our current numbers, which are wonky, that's equivalent to about a hundred ChatGPT queries. So you could just log off TikTok.”

Yeah. There's a lot of interesting dimensions. One is the impact of the training, and the other is the inferencing and using it for a hundred queries or a hundred different activities.

Yeah.

And I think I saw other comparisons of the usage for, I think they were saying, 500 chats, sort of 500 questions. And then I go, “What's a question?” But comparing that to, you know, eating a hamburger.

Hamburger. I mean, I do think a lot of this comes down to the fact that, like, 90% of your carbon footprint, particularly in the West, comes down to basically food, transportation, infrastructure. And those are – aside from, I guess, not eating red meat – policy issues more than anything else.

Yeah. Some of the bigger concerns seem to be that, for instance, in California, agriculture, where you're putting a data center there, and you're directly competing with agricultural water supplies. And so, you know, even if supply is balanced on a worldwide scale, there are going to be local areas where, “Okay, do you want to run more queries, or do you want to have food?” There's a more direct conflict in a few locations at least.

There's water loss from data centers. There's many people in my comments who are often like, “Well, the water cycle means evaporation, condensation, precipitation, so the water eventually comes back down.” And I'm like, yes. But pressure systems exist, which is why California is in a drought. And also data centers are in the US, particularly in red states, so there's no regulation of anything. So, like, it's all complicated.

Yes. Well, I hope you'll write about that in Substack as well. And I’ll look forward to your articles about it.

Yeah. It is in the queue at some point. There are many things that I have rants about in the queue.

In parallel with finishing your PhD!

Yes. I mean, gotta do something to manage the anxiety! Not really, but of the Trump administration and whatever they're doing with my graduate school. Gotta have other outlets.

Yeah. Are you at risk of not being able to finish because of some of those threats? Or do you feel like you've got enough runway that you're going to make it?

No. I'm not at risk of not being able to finish. I think my timeline has gotten a little bit weird because MIT is now pushing people to finish faster than expected. But in terms of, like, my own degree progression, it's more, “Am I going to get paid over the summer?” than it is necessarily, “Am I going to be able to defend in the first place?” I have a committee meeting next week, so we'll find out.

Awesome. Well, good luck - hope that goes well for you, and I’ll look forward to keeping up with your writing!

Yeah. Great chatting with you. Thank you for having me.

Thanks!

Interview References and Links

Jordan Harrod on LinkedIn

Jordan Harrod on Bluesky

Jordan Harrod’s Substack newsletter

About this interview series and newsletter

This post is part of our AI6P interview series on “AI, Software, and Wetware”. It showcases how real people around the world are using their wetware (brains and human intelligence) with AI-based software tools, or are being affected by AI.

And we’re all being affected by AI nowadays in our daily lives, perhaps more than we realize. For some examples, see post “But I Don’t Use AI”.

We want to hear from a diverse pool of people worldwide in a variety of roles. (No technical experience with AI is required.) If you’re interested in being a featured interview guest, anonymous or with credit, please check out our guest FAQ and get in touch!

6 'P's in AI Pods (AI6P) is a 100% reader-supported publication. (No ads, no affiliate links, no paywalls on new posts). All new posts are FREE to read and listen to. To automatically receive new AI6P posts and support our work, consider becoming a subscriber (it’s free)!

Series Credits and References

Audio Sound Effect from Pixabay

Microphone photo by Michal Czyz on Unsplash (contact Michal Czyz on LinkedIn)

If you enjoyed this interview, my guest and I would love to have your support via a heart, share, restack, or Note! One-time tips or voluntary donations via paid subscription are always welcome and appreciated, too 😊

US universities rescinding graduate student admissions due to sudden budgetary issues from reduced federal funding:

https://www.insidehighered.com/news/government/science-research-policy/2025/02/25/facing-nih-cuts-colleges-restrict-grad-student

https://www.huffpost.com/entry/universities-slash-phd-admissions-amid-federal-funding-cuts_n_67c85d82e4b06ea0f7595113

https://fortune.com/2025/03/06/university-elon-musk-doge-cuts-rescind-genz-graduate-school-offers/

Exclusion of women in US clinical trials: “How Dismantling DEI Efforts Could Make Clinical Trials Less Representative”, JAMA, 2025-04-11

https://thehill.com/policy/healthcare/5130839-maternal-mortality-rate-drop-cdc/

Physical Intelligence (laundry-folding robot): https://www.npr.org/2025/03/17/nx-s1-5323897/researchers-are-rushing-to-build-ai-powered-robots-but-will-they-work

One caveat on Claude’s ISO 42001 certification: it may not prove as much as we might hope about whether they’ve sourced & handled IP responsibly. See e.g. this 2025-01-15 article by Oxebridge Quality Resources: “Despite Being Sued for Copyright Infringement, Anthropic Obtains ISO 42001 Certification”.

Origin of quote “The Arc of the Moral Universe Is Long, But It Bends Toward Justice”
