AISW #015 Tracy Bannon, USA-based real technologist 🗣️ (AI, Software, & Wetware interview)

An interview with Real Technologist and software architect Tracy Bannon on her stories of using AI and how she feels about AI using her data and content (audio; 25:41)

Introduction - Trac Bannon interview

This post is part of our 6P interview series on “AI, Software, and Wetware”. Our guests share their experiences with using AI, and how they feel about AI using their data and content.

This interview is available in text and as an audio recording (embedded here in the post, and in our 6P external podcasts). Use these links to listen: Apple Podcasts, Spotify, Pocket Casts, Overcast.fm, YouTube Podcast, or YouTube Music.

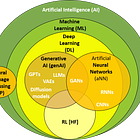

Note: In this article series, “AI” means artificial intelligence and spans classical statistical methods, data analytics, machine learning, generative AI, and other non-generative AI. See this Glossary and “AI Fundamentals #01: What is Artificial Intelligence?” for reference.

Interview

I’m delighted to welcome Real Technologist and software architect Tracy Bannon as our next guest for “AI, Software, and Wetware”. Trac, thank you so much for joining me today! Please tell us about yourself, who you are, and what you do.

Trac Bannon. I am a software architect, engineer, and researcher with the MITRE Corporation. I've been there coming up on five years. They are a federally funded research and development organization. That means that Congress chartered them as a way to provide more technologists, more scientists to the federal government that could be truly objective, right? Industry can't always be completely objective because their goal is to make money.

So it's a pretty cool role, a pretty cool place to be. What do I do? I not only research, I build things. I community-build with others like you. So tons of stuff, tons of stuff.

Sounds like a lot of fun!

What's your experience with AI and machine learning and analytics? I assume you've used it professionally, and maybe personally, and studied the technology?

All of the above. And I have to say, first of all, that I am not a data scientist. I don't ever hope to be a data scientist, but data science adjacent. Because I need to understand two different parts of this.

As a software architect and an engineer, I need to be able to understand AI for software engineering. I also need to be able to understand software engineering for AI.

The difference between those is that I need to look at all of those algorithms, all of those techniques, all of those capabilities, and understand how they impact the software development lifecycle, from vision to fielded operations. So that's a really big important part of what I do.

And the second part of it, and understanding and the research and the hands-on, is understanding the patterns and the practices that are necessary to implement those technologies the data scientists invent that they put into place, that I can help them to get those out into a production environment for end users.

So you can see there's a dividing line. I'm either using it to create software, or I'm using my different techniques to provide software out.

I do use it personally. I use many different types of generative AI. All of us in one way or another are using different machine learning algorithms. Those have been maturing for, gosh, decades now, and they're embedded day in and day out. We don't even realize that we're using them. So yep, just like everybody else. I try to make sure that I'm being effective and efficient, and give myself a little time box so that I don't spend an entire evening going down the rabbit hole with the generative AI.

That makes total sense.

Can you share a specific story with us on how you have used AI or machine learning? I'd like to hear your thoughts about how well the AI features of the tools worked for you or didn't, and what went well, and what didn't go so well.

So I, as I mentioned, spend an awful lot of time experimenting, but also doing scholarly research into how I can use these tools and techniques for the software development lifecycle. And one of the things that I've been working to truly understand is: how good is this? How good is generative AI at helping us to generate assets for the software development?

One of the places that I wanted to debunk first, or maybe say get as much detail as possible, is what's causing all the FOMO right now, the Fear Of Missing Out. And that is the whole world saying that we can just generate, generate our code, we just generate. And the fact of the matter is that it isn't that great.

Generative AI models have been trained on the world's corpus of available data. Guess what that means? It means it's looking at open source. It means it's looking at repositories that are open for anybody to look at. Do you think that the best of the best keep their stuff out in the open? They don't.

So those models have been trained on lesser-quality samples. That means that when you are trying to use it for generating, even calling it code completion, it's not as well baked as it will eventually be. So for right now, it takes more humans in the loop.

It's a fantastic jump-start, though, if I allow it to look at my corpus of information. I let it look at my code base, I let it look at my tests, for example, and I can interact with it. I can chat with my code, so to speak, and ask the GPT to explain something to me. Fantastic for when you are new to a code base, for when you're new to a business or mission domain, or if you're new in your career. So kind of two sides of that:

More senior people: They're getting some real productivity gains, because they already know if that's good or bad code, if that's a good or bad piece of software that's being provided to them.

Less advanced in their career: Not as good for them. It's better for helping them to understand things.

So what went well? Well, it works well when I generate things, because I've been doing this for a couple of decades.

What doesn't go so well? If I am truly messing around with a language that I've never worked with before, like Ada, I don't really have the background to be able to evaluate it and say, there's a better way to do that.

Yeah, that makes total sense. And that's a great insight on who it's good for - I've heard some people refer to generative AI saying that you should treat it like an intern, that you have to supervise very carefully and monitor and check their code and give them feedback and treat them that way.

I've also heard some people say that they feel like the more senior people don't get as much of a bump. And I've experienced this myself. I get to a point, after the first few iterations, saying you know what, from here on, it's faster for me just to code it myself. I know where to go from here. You got me past not knowing this API for this package I haven't used before. I'm good. And so it's interesting to hear the different perspectives on when it's useful and when it's not.

It really depends on what you're trying to do. I find the same thing when I'm writing. So I author a fair amount of things, and I find that I can use it as a muse. I tend to say that, instead of a generative AI model hallucinating, I tend to say that it has a creative streak. So if I think of it from that creative streak perspective, right, think about having somebody going over to the whiteboard with them. And they have these whack-a-mole ideas that are all over the place. And you love it because it makes you think, and then you turn around and walk away. And you shake your head and go, well, 99% of that doesn't matter to me. So high creativity, right, high creativity ratio.

The same can be true when you're using these different generative AI models. But it's also really important that as you talk about this, as I talk about this, that we make sure folks know that there's more to AI than generative AI. We've been doing this for a long, long, long time.

As a matter of fact, a couple of us - Debbie Brey, Bill Bensing, Hasan Yasar, Robin Yeman, Dr. Suzette Johnson - we just wrote a paper together that got published by IT Revolution [1]. And it has to do with applying AI to digital twins. So not generative AI, but applying machine learning, applying these deterministic algorithms that can really be a help.

So it's just really important that we are very clear, consistently, because the whole world's not fair right now. You say AI, and everybody's out there making recipes and chicken jokes with ChatGPT.

Right. And the other thing that we hear sometimes is when people talk about AI, they're referring only to generative AI, or they're referring to AGI, which - we're nowhere near there yet. In so many cases, though, we've had AI for years before we even came along with generative AI. And so when we talk about AI, that's one thing that I would say, well, what exactly do you mean? And that's a question that would be really good for people just to learn to ask when they read something about AI. What AI do you mean? And just getting that context before reading on and drawing conclusions.

You're absolutely right. You are absolutely right, Karen.

Are there any things that you've avoided using AI-based tools for? And if so, can you share an example of when and why you chose not to use AI?

So I have a podcast called Real Technologists, and it's a little bit different than just a challenge and response. I'm having a conversation. It is truly me investing an hour to an hour and a half with somebody else. And we have a conversation and we record. Now, from that, I take a step back and I write this script myself using what's called narrative style. That means that you don't listen to our conversation. What you listen to is my take on this person's choices that they've made throughout their life. Real Technologists is about how people navigated to get to where they are - their origin stories and their journeys.

It's really important to me that it's by my hand. It's REALLY important to me that it's by my hand. So the most that I will use generative AI to help me with is to make a chronology and put the bullet points of a chronology. But I write those scripts. And you'll see me put on some of my posts against these things "by my own hand". Because I want people to know that that creativity, that was mine. That yeah, help making a bullet list, pshh, it doesn't matter if it's GPT or if it's my son or my daughter. That doesn't matter. So I really try to avoid those things that I want to be truly sincere and truly authentic.

Yeah, that's a great perspective on it. It's always interesting to hear why people choose not to use it. And I don't use it for any of my writing, really. Because what I found is that the process of writing helps me think, and why would I outsource my thinking? The whole point of this is to help me form my thoughts and get them onto digital paper, get them out there, and be able to have conversations. So I don't see any advantage to short-cutting that. And that's aside from any ethical considerations about where the data comes from. But we'll get to that later.

Well, and also, depending on your writing style, it can also help you to know what you don't want to write. So I find that if I ask for a summary or create a post of some sort, I look at it, and 9 times out of 10 - well, actually 10 times out of 10 - I look at it and say, no, I wouldn't write that way. Or, those are not the key points that matter to me. Sometimes it helps me to avoid it being mundane, avoid my own writing. Kind of an inverse muse, right? You learn as much from a bad example, as you can from a good example. So I would say that sometimes I use it that way.

That's a great point. I hadn't thought about that. I may have to try that.

So as I mentioned, one common and growing concern nowadays, and I know you're very much aware of this, is where do AI and machine learning systems get the data and the content that they train on? They often use data that users have put into online systems or publish online. And companies aren't always transparent about how they intend to use our data when we sign up.

How do you feel about companies using data and content for training their AI and machine learning systems and tools? Specifically, should ethical AI tool companies get consent from and compensate the people whose data they want to use for training?

I think this is a really difficult question, because I can tell you what I want in my heart of hearts, and then I can tell you what the pragmatist believes.

In my heart of hearts: if I write something, I own it. If I put it out on the web, if I have it annotated that I don't want it to be copied, then it's kind of up for grabs. Now, what I'd like is if I write it, I own it. If it's about me, I own it.

But that's not the reality of the platforms that we agreed to. The difference is that we've clicked through paying into them. I would strongly recommend folks to go out and take a listen to a podcast called "That's in my EULA?" [2] It was made by a guy named Mark Miller - Karen, you know Mark Miller. And it's really cool. I think he did about a dozen episodes - met with a lawyer, and they went through a EULA. And you'd be surprised what you're agreeing to.

So the pragmatist says: we've already signed away some things. I think that we need pullback from how much we're giving away. Think about just how hard it is to protect your valuables already, how hard it is to protect your passwords, and your social security number, and all of those things. And now, if I tag my son on Facebook, I have to be wondering who's using that, and social engineering, and the other pieces that are going with it.

We, and I mean this is the global we, have a lot to do really quickly, to figure out very specifically the types of data that can and cannot be used. The EU is in a good place right now. But I think even their rules are not specific enough and tight enough.

I tend to be more of a libertarian, just by my own standings - small amount of government, least amount of government necessary, allowing people to have the most amount of freedom in what they're doing. And this is one of those times where I believe we need to have stronger legislation. I can roll back a whole bunch, a dozen, programs that I have on the top of my cut list. But I believe we need to double down on this aspect of data protections. Who owns the IP? Is it my IP? Do I have to make sure that I go back through blogs that I wrote a decade ago, and I'm adding tags on them, so they don't pull them in and suck them in and I don't get credit?

There's a lot for us here. There's a lot for us to take care of right now, a lot for us to noodle on.

Yeah, data sourcing is something that's really getting increasing visibility. I think that's a good thing, in looking at the transparency.

So when you've worked on building an AI-based tool or system, is there anything that you can share about where the data came from and how it was obtained?

So in general, any data that I've had the ability to work with, I do not do the first line model training, right? So foundational models - not a part of training that at all.

Even with fine tuning, which is pretty hardcore, but getting into what are called the embeddings and retrieval augmentation, and using the smaller corpus of data to put guardrails around what happens with that language model. Those are private data coming from those private entities. It's not coming from the public source. Because the majority of what I do is supporting the federal government, her allies, and on behalf of the public good. So it's very private and it's held in private. Those models, or should I say that augmentation, those aren't public.
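For readers new to the term, the retrieval augmentation idea mentioned above can be sketched in a few lines. This is a toy illustration only, not Trac's or MITRE's implementation: the sample documents, the word-count stand-in for an embedding, and every function name here are assumptions made for the example. A real system would use a learned embedding model and a vector store, and would send the resulting prompt to a language model.

```python
import math
import re
from collections import Counter

# Hypothetical private documents (assumed for illustration); in practice
# this corpus stays inside the organization and is never made public.
PRIVATE_CORPUS = [
    "Deployment runbook: services are promoted to production after passing the security scan.",
    "Coding standard: every public function needs a unit test and a docstring.",
    "Incident log: the March outage was caused by an expired certificate.",
]


def embed(text):
    """Toy stand-in for an embedding: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two count vectors (0.0 if either is empty)."""
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question, corpus, k=1):
    """Return the k documents most similar to the question."""
    q = embed(question)
    ranked = sorted(corpus, key=lambda doc: cosine(q, embed(doc)), reverse=True)
    return ranked[:k]


def build_prompt(question, corpus, k=1):
    """Wrap the retrieved private context around the question as a guardrail."""
    context = "\n".join(retrieve(question, corpus, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    print(build_prompt("What caused the March outage?", PRIVATE_CORPUS))
```

The point of the sketch is the shape of the flow: the foundation model is not retrained, and the private data only appears as retrieved context placed in front of the question.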

I understand that. You hear a lot about the scrapers and how some of the fundamental models have been trained with, as you said, just what's out there and it may not be the best of the best. And in some cases, though, they are picking up really high quality creations. And it's a definite concern.

Another aspect that I wanted to talk to you about: as members of the public, there are cases where our personal data or content may have been used, or has been used, by an AI-based tool or system. Some examples might be online test-taking tools, or the TSA biometrics and photo screenings at the airport, which a lot of people don't know you can actually opt out of.

Have you seen anybody opt out yet?

I have not. But I haven't been at airports lately.

Okay.

That's an example. Or you sign up for a website and they ask for an exact birth date when all they really need to know is if you're over 18 or not. Different cases like that, where different sites, or different services that we may *have* to use are collecting our information and sharing it.

I was just reading earlier today about cars and the data that the infotainment systems collect when you've connected up your phone to the cars. And that data is going all kinds of places. So it's really hard to avoid our data being used and sold and transferred. It's really pervasive.

It is. You can take some steps. When you buy a car, you need to understand what you're agreeing to. When you're buying a new system, what are you agreeing to? When a site comes up - and it's getting taxing now, but it'll ask you - will you accept all the cookies? Or will you accept all these things?

I'm the person who goes ‘Reject All’! Or customize them, and say the only thing that you can have is ‘Strictly Necessary’. However, right now we're under observation, we're under surveillance, all the time. And I don't mean by the government. I just mean by all of these things.

We have to be cognizant about it. I actually believe that there's a lot of merit in reducing our digital footprints - personally reducing. I stopped using Facebook in 2019. Part of it was changing jobs, but part of it was realizing how much that data was getting pulled and getting used. And I had noticed that my face was getting tagged on pictures, and they weren't pictures that I took. They're pictures that other people had. So those kinds of algorithms, they've been running for a bit.

A lot of people will say, hey, nothing to hide. I don't think that that is a fair approach anymore. That may have been an okay approach 25 years ago, when the most that you've had was somebody walking by your office and you closed the door because you didn't want to hear them on your analog phone. Even that was not a cell phone, but it was not digital in the same way that we're talking about now.

It's much more difficult - it's MUCH more difficult - to be anonymous right now, really exceptionally difficult. Should you put your phone in a Faraday bag [3], right? Should you make sure that you're being blocked, so that nobody knows where you're at? Because even when your phone is off, you can still tell where a phone is at. There are, what, 21 sensors on a phone, something like that?

We should all be thinking about, where's my data going? When I sign into this, when I do this, when I put my finger on something, when I swipe over it, when I enter in a PIN code - any time I'm ever doing anything, the security part of me says, where's that going? And did those people secure it?

Do I believe, based on the interface that I'm seeing, based on the presentation, that cheap kiosk? Should I really be putting my information into that cheap kiosk? Mmm, yeah, it looks pretty sketchy - I don't think I'm going to do that. So … making decisions along that line.

Yeah, I had an old blog post about going to a home show [4], and they have these kiosks where you can sign up to win something. And they ask for some really invasive info. Like, okay, you really need to know my income bracket to decide whether or not I get to win a new deck? It was just SO much overreach.

I'm like you, I do the ‘Reject All’, and I'm the fine print reader in our family. I read all the terms and conditions. And I read through those at the home show and said, Nope, nope, not doing this.

Yup!

Same thing when we got an Alexa a few years ago as a gift, and read through that and said, no, it's going back in the box.

We just went through a de-Alexa-ing of my house. For a while, I had set this up for my parents originally to help them. They had a ranch house with a Florida room. I call it a Florida room - a screened-in beautiful four-season room. That's where they would spend the majority of their days, when they weren't in the kitchen. When they go to bed at night, they'd go the whole way through the house, they get the whole way down to the other end. And my dad, with the bum knee, would forget to turn off the light.

So it started off innocently that I got him a single Dot. I got him a single bulb. And he could turn off the lights. Well, then I showed him how to play his music. And then my mom loved it. And we started to proliferate it in their house.

And I thought, you know what, they love it so much, and I could, if I get one, I can drop in on them. So it started to be a communication mechanism. So I had Dots, I have the Shows, I have - well, everything.

And then I noticed the amount of private information that was popping up on screens that had nothing to do with this. So I'm having a conversation in my living room with my son. And next thing I know, I go to get on my phone and I open Chrome instead of Safari. And why did they know that I want that pair of shoes? I didn't search for that online - never had that conversation. So a lot of people will tell those stories.

And they're always on. They're ALWAYS ON. Yes, there's a wake-up word. But that doesn't mean it's turning on. It means it had to listen to you. It's no different than you have somebody sitting beside you. And they're reading a book, but they're listening to everything. And they're supposed to pick their head up when you say Alexa, or you say whatever you call your particular tool.

So we have just gone through and de-Alexa'd our house.

Which leads me to what's really my last question, and I'd love to hear your thoughts on this. Public distrust of AI and tech companies has been growing, for understandable reasons. What do YOU think is THE most important thing that AI companies need to do to earn and keep your trust? And do you have specific ideas on how they can do that?

There's going to have to be a lot of transparency. We're going to have to have publishable school standards, publicly available attestations. And we're going to have to be auditable. I'm not going to believe a mega corporation or even a small company. Are you suddenly going to believe that big pharma loves you, Karen, and is going to do everything that's good on your behalf? Do you suddenly believe that a car manufacturer is giving you the best deal possible, because they're altruistic and they love you? It's no different with the companies that are grabbing your data.

Does it make them evil? I'm not going to go that far. They're businesses; we live in a democratic republic. And we thrive because of capitalism. Our innovation thrives because of capitalism. Sometimes, like now, we need to clip the wings, and they're going to have to agree to having their wings clipped. And they're going to have to be able to produce those assets.

Yeah, transparency is something I hear a lot from people - that this is really resonating - that we can't just trust them. We do need to understand what they're doing, and give not just consent, but informed consent - which is a lot harder when the EULA is 20 or 50 pages long, right?

Absolutely. Absolutely. Right. Well, thank you for having me today.

Is there anything else that you'd like to share with our audience?

I will be doing a keynote at DevOps Days Dallas [5]. I'll be speaking at All Day DevOps [6] as well, on the same kinds of topics. I'll be talking about how we can leverage generative AI appropriately. What are the gotchas, and what are some opportunities, so that people are going into it eyes wide open.

And folks can always hit up my own website - it's TracyBannon.tech. And they can keep on top of some of the blogs that I put out there. I slow roll this because we got a whole lot of information coming into us right now. So they can always get in touch and we can go from there.

And you mentioned your podcast as well, right?

Yep. It is real technologists dot org.

All right. We'll include that link as well then!

Thank you.

Trac, thank you so much for joining our interview series. It's been really great learning about what you've been doing with artificial intelligence. And what you choose to use your human intelligence for and how you make it work. So thank you very much!

You're welcome. It's been really fun to talk with you tonight, Karen.

References

All Day DevOps is Oct. 10, 2024 and it’s FREE, so if you’re interested in DevOps or AI, be sure to register to hear Trac and others speak!

Trac’s website: TracyBannon.tech

Trac’s podcast: RealTechnologists.org

See the End Notes of this article for links to Trac’s article on AI-Enabled Digital Twins, Mark Miller’s podcast, and my 2019 home show post.

About this interview series and newsletter

This post is part of our 2024 interview series on “AI, Software, and Wetware”. It showcases how real people around the world are using their wetware (brains and human intelligence) with AI-based software tools or being affected by AI.

And we’re all being affected by AI nowadays in our daily lives, perhaps more than we realize. For some examples, see post “But I don’t use AI”!

We want to hear from a diverse pool of people worldwide in a variety of roles. If you’re interested in being a featured interview guest (anonymous or with credit), please get in touch!

Credits and Additional References

Audio Sound Effect from Pixabay

End Notes

[1] “AI-Enabled Digital Twins”, IT Revolution, Enterprise Technology Leadership Journal Fall 2024 | AI-Enablement for Digital Twins, 42 pp. By Tracy Bannon, Bill Bensing, Debbie Brey, Suzette Johnson, Rosalind Radcliffe, Hasan Yasar, Robin Yeman.

[2] Mark Miller’s podcast (“That’s in my EULA?”): https://eula.thesourcednetwork.com

[4] “Data privacy at the home show”, Agile Analytics and Beyond, 2019-02-17

[5] DevOps Days Dallas, Oct. 9-10, 2024

Main conference page: https://devopsdays.org/events/2024-dallas/welcome/

Trac’s speaker page: https://devopsdays.org/events/2024-dallas/speakers/tracy-bannon/

[6] All Day DevOps Conference, https://www.alldaydevops.com, Oct. 10, 2024