AISW #024: Anonymous6, CR-based embedded software developer 📜 (AI, Software, & Wetware interview)

An interview with an anonymous Costa Rica-based embedded software developer on their stories of using AI and how they feel about how AI is using people's data and content

Introduction

This post is part of our 6P interview series on “AI, Software, and Wetware”. Our guests share their experiences with using AI, and how they feel about AI using their data and content.

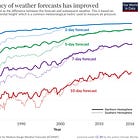

Note: In this article series, “AI” means artificial intelligence and spans classical statistical methods, data analytics, machine learning, generative AI, and other non-generative AI. See this Glossary and “AI Fundamentals #01: What is Artificial Intelligence?” for reference.

📜 Interview - Anonymous6

Today’s guest on “AI, Software, and Wetware” has chosen to be anonymous.

Thank you so much for joining me today! Please tell us about yourself, who you are, and what you do.

I’m a 25-year-old software developer based in Costa Rica. I mostly work in low-level software for embedded devices.

Thank you for sharing that background. You’re the first embedded developer I’ve interviewed for this series!

What is your experience with AI, ML, and analytics? Have you used it professionally or personally, or studied the technology?

I’d say I have a medium level of experience with AI/ML. I’ve used it both professionally and personally, and I’ve also built systems implementing it in both academic and personal projects.

How about personal experience with AI / ML? Do you use any social media or streaming services? They use lots of machine learning.

I use LinkedIn, Facebook, Instagram, and YouTube.

Can you share a specific story on how you have used tools with AI or ML features? What are your thoughts on how well the AI features of those tools worked for you, or didn’t? What went well and what didn’t go so well?

I once had to prepare for a presentation at work. I used ChatGPT to generate a document with all of the topics and information I wanted to mention in the presentation. Then I used another tool (I don’t remember its name) to generate slides with images and text summarizing the previously generated document.

It didn’t turn out that bad. I had to spend some time on the document generated by ChatGPT since it lacked that “storytelling” you usually want to have in presentations, even though I explicitly prompted for it. It also took me several iterations to get to a decent-enough version of the document to be satisfied with ChatGPT’s output.

As for the tool for generating the slides, that’s where I really had to spend most of my time. It lacked the capability to tell it what you wanted for your slides. Instead, you only provided it with a piece of text and specified how many slides you wanted to get out of it (plus other parameters like the number of images per slide and the amount of text, among others). The generated slides were not at all what I expected. There were many slides focusing on irrelevant parts of the text, and most of them missed the main ideas of the document. As for the images, I’d say 1 out of every 5 was good enough for me.

Personally, I wouldn’t use any of these tools again. I initially thought I was going to end up saving some time compared to just writing the document and creating the slides myself, but I really ended up spending roughly the same amount of time.

I’ve been seeing conflicting studies on whether AI really improves people’s productivity or not. One out of five images isn’t a great success rate. It’s interesting to hear that you feel that the AI tools didn’t save you any time for this kind of work. One of my other guests expressed interest in trying a combination of ChatGPT and Canva to make slides, but she hadn’t used it yet.

If you have avoided using AI-based tools for some things (or anything), can you share an example of when, and why you chose not to use AI?

One of the things I avoid using generative AI tools such as ChatGPT for is code generation, especially for complex code in the low-level domain.

I remember some time ago when I was trying to come up with a macro to statically register entries in a table at build time.

Long story short, I ended up wasting too much time prompting ChatGPT for what I needed it to generate. Most of the time it either generated solutions that didn’t do at all what I needed them to do, or claimed it wasn’t possible to generate such a solution.

After some hours searching for a solution in different forums, I was able to find and implement what I needed.

That’s a good observation. Tools like ChatGPT won’t be better at generating code than the code they were trained on. I’d expect that most of the really good embedded code, and maybe also some build and DevOps code, is going to be company proprietary and protected — not available on the public internet where it could be scraped for AI training.

A common and growing concern nowadays is where AI/ML systems get the data and content they train on. They often use data that users put into online systems or publish online. And companies are not always transparent about how they intend to use our data when we sign up.

How do you feel about companies using data and content for training their AI and ML systems and tools? Should ethical AI tool companies get consent from (and compensate) people whose data they want to use for training?

Yes, absolutely. Companies should get users’ consent and explicitly let the users know what they plan to use their data for, and depending on the case, compensate the users. Also, companies offering AI tools, in my opinion, should disclose what data they used to train their systems, where they got that data from, and how.

When you’ve USED AI-based tools, do you as a user know where the data used for the AI models came from, and whether the original creators of the data consented to its use? (Not all tool providers are transparent about sharing this info)

No, I don’t think they’ve been transparent about where they got the data from, who they got it from and how.

If you’ve worked with BUILDING an AI-based tool or system, what can you share about where the data came from and how it was obtained?

In my case, the data was generated. It was obtained using specialized equipment (cameras and sensors) and the customer’s assets.

That sounds fair. It was probably a lot of work to create that data.

As members of the public, there are cases where our personal data or content may have been used, or has been used, by an AI-based tool or system. For instance, how do you feel about use of your data in your cell phone and its apps?

That's another controversial topic. I definitely feel my information is being used without my consent. Ads are a simple example. IDK if it has ever happened to you, but it seems like our phones are always listening to our conversations. It's not uncommon to be talking with someone about a particular topic, and then start seeing ads related to that conversation minutes later.

Another example I recently noticed is AliExpress. Stuff I've searched for in the AliExpress app started appearing in Facebook's ads. All these large social media companies are definitely using and selling lots of our data, with us not even noticing. We don't even know what security policies these companies have in place to protect our data. It's concerning to know your data might get exposed or compromised by one of these companies.

Yes, I actually just wrote an article about our phones listening to us!

Do you know of any company you gave your data or content to that made you aware that they might use your info for training AI/ML?

No, I don’t.

Have you ever been surprised by finding out they were using it for AI? If so, did you feel like you had a real choice about opting out, or about declining the changed T&Cs?

No, I haven’t, at least not yet.

You mentioned using Facebook and Instagram. There was a lot of concern this summer about them using our data and photos and other content for training their AI models. They did not exactly go out of their way to notify us, so you may have missed hearing about it.

And this month [September] there was an uproar over how LinkedIn set all of us (at least, all of us who weren’t in the EU and protected by GDPR) to be opted-in by default for training their AI models. You may want to check your privacy settings there.

Has a company’s use of your personal data and content created any specific issues for you, such as privacy or phishing?

No.

That’s good!

Are you ever asked for personal information by local businesses in CR?

Not really, unless you agree to being added to their database of existing customers for promotions and offers, you need them to get in contact with you, or you want them to send a bill to your email.

That’s good too - it sounds like you feel you have a choice.

Public distrust of AI and tech companies has been growing. What do you think is THE most important thing that AI companies need to do to earn and keep your trust? Do you have specific ideas on how they can do that?

Transparency. The fact that most companies do not (or don’t want to) reveal how and where they get their data creates distrust among their users. The first step is just being transparent about what data they have, how they got it, where they got it from, and what they plan to use it for.

Agreed. Requiring transparency by AI and tech companies seems to be a universal sentiment among all of my interview guests.

Thank you so much for joining our interview series. It’s been great learning about what you’re doing with artificial intelligence tools, how you decide when to use human intelligence for some things, and how you feel about use of your data!

About this interview series and newsletter

This post is part of our 2024 interview series on “AI, Software, and Wetware”. It showcases how real people around the world are using their wetware (brains and human intelligence) with AI-based software tools or being affected by AI.

And we’re all being affected by AI nowadays in our daily lives, perhaps more than we realize. For some examples, see post “But I don’t use AI”!

We want to hear from a diverse pool of people worldwide in a variety of roles. If you’re interested in being a featured interview guest (anonymous or with credit), please get in touch!

6 'P's in AI Pods is a 100% reader-supported publication. All new posts are FREE to read (and listen to). To automatically receive new 6P posts and support our work, consider becoming a subscriber (free)! (Want to subscribe to only the People section for these interviews? Here’s how to manage sections.)

Enjoyed this interview? Great! Voluntary donations via paid subscriptions are cool, one-time tips are appreciated, and shares/hearts/comments/restacks are awesome 😊

Credits and References

Microphone photo by Michal Czyz on Unsplash (contact Michal Czyz on LinkedIn)
