Generative AI and gender diversity

How hallucinations in generative AI, specifically large language models, can spread harmful misinformation about LGBTQIA+ folks

Hi folks, and happy Pride Month! Today’s post is a guest article by Autumn Chadwick on an important topic: how experimental or improperly trained generative AI (genAI) features can adversely affect LGBTQIA+ people.

GenAI Hallucinations

Many folks have probably seen recent stories about glaring mistakes made by generative AI tools. For example:

Google’s new AI search assistant, part of its AI Overviews feature, answering the question “How many rocks should a person eat a day?” [1][2]

AI-generated images showing people with one too many arms or fingers.

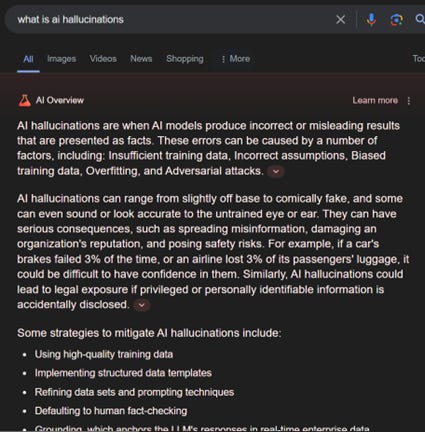

Large language models (LLMs) and other machine learning models sometimes run into a problem called “hallucination”. In short, a hallucination is output that shouldn’t be there: content that is fabricated, wrong, or incomplete in a misleading way.

It’s also funny, and a little ironic, to ask Google Search “What is AI hallucinations” and have its own AI assistant return an answer:

GenAI and LGBTQIA+

So there’s a growing narrative that hallucinations are an issue plaguing AI search engines. What could this mean for the spread of misinformation about marginalized communities specifically?

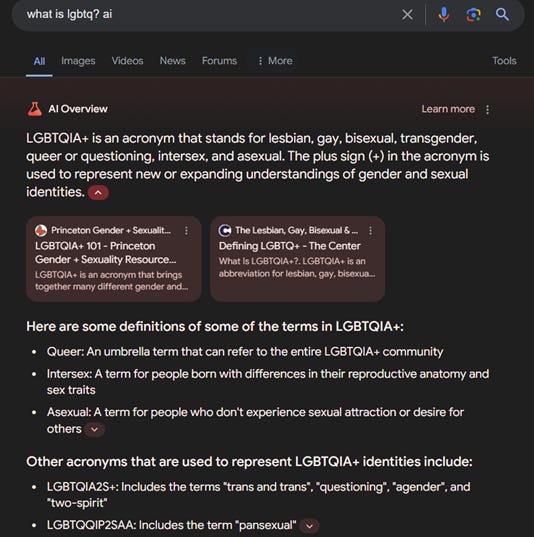

Let’s start with a simple query: “What is LGBTQ? AI”. (I had to add “AI” to the query to get Google’s search engine to actually display the AI assistant’s answer, since plain “What is LGBTQ?” did not trigger it on my first attempt.)

Reading through this response, a few parts stand out:

1) The definition of “asexual” is partly correct (little to no sexual attraction, depending on where you are on that spectrum). But it also seems to fold “aromantic” into the definition by describing no desire for others at all.

2) When I read through the other acronyms, such as LGBTQAI2S, I do enjoy the redundant “trans and trans”.

3) It’s also interesting to notice which terms the AI assistant highlights, since its callouts aren’t a complete list of every part of the acronym. For example, for LGBTQQIP2SAA it includes “pansexual” but leaves “androgynous” out of the summary.

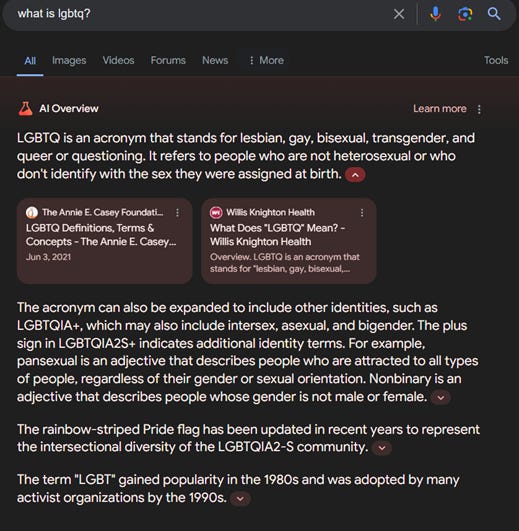

After the first search worked, I did manage to get the AI assistant to appear on a second try with plain “What is LGBTQ?”.

Impact of GenAI Hallucinations on LGBTQIA+ Folks

As we see above, AI isn’t perfect. Consider someone who is trying to find information, or trying to figure out whether they themselves might be questioning. What if this person is given misleading or factually inaccurate information? Could it turn someone’s perception against the LGBTQIA+ community, or reinforce harmful ideologies and stereotypes? For someone who’s questioning, could it lead them to hide who they are for longer, or reinforce the sense that society won’t allow them to be themselves?

What about a confused parent whose child has just told them they don’t identify with their sex assigned at birth, and who is now looking for answers on how to help with the distress their child is feeling? Is that parent directed to sources that will actually help their child, or told that conversion camps are a way to solve this “issue”?

Remember, too, that these hallucinations stem from the training data a model ingests.

What if a model is trained on too much anti-LGBTQ+ content?

What if the only “truth” known to an AI model isn’t fact-checked, or fails to consider intersectionality?

What You Can Do

Currently, Google’s assistant carries only a small note that this is an experimental feature, and even that note doesn’t directly advise caution or warn that the information may be misleading. Data moderation and scrutiny of AI results are critical for filtering out bias and making sure the “truths” that come out are actually trustworthy and correct.

As an alternative to generative AI results from ChatGPT or Google’s AI search assistant, I recommend the following links for accurate, trustworthy basic information about the LGBTQIA+ community:

Cheers, folks, and until next time,

Autumn

Karen here. Misinformation about LGBTQIA+ facts clearly isn’t the only way in which generative AI hallucinations could have adverse effects on folks. Autumn will be writing more about this in future guest posts. But understanding what “LGBTQIA+” means is an important first step. Getting it right matters! Thank you, Autumn, for sharing these insights.

Do you have any experiences with genAI providing biased results which might adversely impact LGBTQIA+ people?

Or do you know of any genAI tool providers who are taking good precautions to prevent biases harmful to marginalized communities?

Please share!

References:

[1] Liv McMahon and Zoe Kleinman, “Glue pizza and eat rocks: Google AI search errors go viral”, BBC News, 2024-05-22.

[2] Joe Youngblood, “Everything We Know About Google’s AI Overviews & AI Organized SERPs in Search Announced at Google I/O 2024”, 2024-05-15.