Introduction -

This post is part of our AI6P interview series on “AI, Software, and Wetware”. Our guests share their experiences with using AI, and how they feel about AI using their data and content.

This interview is available as an audio recording (embedded here in the post, and later in our AI6P external podcasts). This post includes the full, human-edited transcript.

Note: In this article series, “AI” means artificial intelligence and spans classical statistical methods, data analytics, machine learning, generative AI, and other non-generative AI. See this Glossary and “AI Fundamentals #01: What is Artificial Intelligence?” for reference.

Interview -

Karen: I am delighted to welcome Rebecca Mbaya from the Democratic Republic of the Congo, currently living in South Africa, for “AI, Software, and Wetware” today. Rebecca, thank you so much for joining me on this interview! Please tell us about yourself, who you are, and what you do.

Rebecca: Thank you so much, Karen, for having me here. It's actually my first time as a guest on a podcast, and this is very exciting. And I'm delighted to be here and contribute to this meaningful conversation.

My name is Rebecca Mbaya, like you said. And I am originally from the DRC, Congo Kinshasa. But I've been living in South Africa for over a decade now. I moved here to pursue my university studies. After obtaining my master's degree in international relations, I went on to the business school for a postgraduate diploma in management and business administration.

At first, I thought I was going to pursue an academic career. That was the plan. But after my master's, the idea didn't feel as exciting anymore. Deep down, I knew I wanted to explore something different, something that felt more dynamic. So I went to the business school, and that's actually where I discovered I had a strong business acumen alongside my more artistic side -- you know, the kind of talent that you don't necessarily discover through formal education.

Professionally, I sort of stumbled into monitoring and evaluation, or M and E. During my business school program, we had to complete a two-month internship to meet the graduation requirements. And I ended up interning at a company that was, and still is, deeply involved in improving the data culture in South African schools.

So I joined the advisory team as an intern, and after I graduated, they brought me on as an M&E researcher. Since then, my work has mostly focused on M&E, particularly in the international development and education sectors. That's really where I've built my expertise, at the intersection of data, learning, and social impact.

Right now I'm working independently, flying solo, so you could say. And I recently started a Substack newsletter called “Breaking Down Walls and Building Bridges”. It's a space where I explore the intersection of AI, data, technology, and society, but with a focus on centering African voices and grounding these big, often abstract topics in real human stories.

A lot of my writing is shaped by questions around power, who holds it, who gets left out, and how often technology either reinforces or challenges those dynamics. I'm especially interested in how AI is being developed and deployed in ways that impact underserved communities, often without their inputs or even awareness.

Coming from the DRC, which is a place that's often overlooked in global tech conversations, and navigating this space as a Black African woman, I carry a deep awareness of how narratives are shaped and whose stories get told. That awareness is part of what drives me. I want to make sure that conversations about AI and our digital future don't just happen around us, but actually include us, especially those of us who historically have been excluded from the decision making, yet often carried the biggest burdens of those decisions.

Karen: Yeah, that's such an important perspective that I think we've been missing hearing, from a lot of the conversations around AI in particular and just the world in general. So I'm really happy that you're here and talking about that. One quick question: you mentioned, I think, M and E. What's “M and E”?

Rebecca: Oh, it's a very good question, Karen, because I often find myself having to explain that to the people around me who don't know what that is. And my family, my mom, often ask me, “What is that?” And I have to break it down in a way that is understandable. So I often use the analogy of a vehicle, right, to explain both aspects: monitoring, which is M, and then evaluation, which is the E.

In the example I'll give, the vehicle will be a program designed to move from point A to point B. That will be in the development sector where you have an organization wanting, for instance, to improve the financial literacy in some local communities in African countries. I'll use Africa as the place.

And then, monitoring, in this case, it will be the dashboard in the vehicle that allows us to track the progress in real time. So it's showing us real time data. As we move, we can clearly see the engine light. We can keep an eye on fluid levels. We see the speed at which we're moving. We are informed of the progress and performance of the car in real time. So it's an ongoing process.

But then you have evaluation, which is not an ongoing process. It happens periodically. So in our vehicle case, you have evaluation as the mechanic and road trip review, if I can say it that way. So we would stop at key points. This also includes the endpoint of the long trip to assess how the trip went. So it'll be the periodic deep assessment, whereby a midterm evaluation, for instance, diagnoses why the engine is overheating and where the bottlenecks are. And then, are we still on the right path? At this point we can adjust and fix issues before the trip comes to an end.

And then at the end point, we do a journey inspection, a final evaluation, and an impact check to assess if we reached point B and what changed. We do an efficiency review to assess if the route saved us time, and was this even the best car to use for our journey? We take notes and then we learn our lessons and perhaps it is best that next time we avoid mountainous roads.

So I usually use this analogy to explain what M and E is. I hope that was clear.

Karen: Yes. That's a good explanation. And I like that analogy for it. So tell us a little bit about your level of experience with AI and machine learning and analytics-- whether you've used it professionally, personally, or if you studied the technology?

Rebecca: So, I don't come from a traditional technical background in AI or machine learning. I haven't built models or written complex algorithms. But my experience with the technologies is really grounded in the application, the impact and the broader social questions they raise. Like I mentioned, my professional experience within the development space gave me a strong understanding of how data shapes real world decisions, especially in areas like education and governance and community development.

My interest in AI really started to evolve beyond just data and development. It became about understanding how these technologies shape society, especially for communities like mine that don't often get a seat at the table.

So back in 2022, I co-founded a venture called Congo Excel Technologies. It started from this realization that in many Congolese communities, conversations about AI were either clouded by skepticism or inflated by hype. So I wanted to help the communities cut through the noise so they could make informed decisions about emerging tech in general, but AI in particular -- not just from a user perspective, but also as future builders and leaders in that space.

We ran a mix of online and in-person workshops and trainings, really trying to make the conversation accessible. Then in 2024, we worked with our first client, if I can say it that way, yeah? That was a law firm that was interested in applying AI to streamline their processes, their operations.

So I led a small team of two developers, and we built an AI-powered chatbot that was tailored to the context of the DRC legal frameworks. And that experience really taught me so much, not just technically, but about how complex it is to localize AI responsibly. Everything from ethical concerns to infrastructure limits, cultural context, data gaps. It was all there.

And even though I'm no longer with CET, that mission of making AI work for our reality still drives me. Whether through my newsletter, the knowledge hub that I'm building, or smaller projects, I'm trying to hold space for more inclusive, grounded tech ecosystems in Africa.

And technically I've picked up some skills in Python, JavaScript -- those were really DIYs -- and front end web development. Not because I want to become a full-time developer, but to better understand the systems I engage with. I'm also learning more about AI and machine learning, not for the sake of jumping on a trend, but because I believe that if I want to contribute meaningfully, I need to understand both the critique and the construction.

Eventually, I would love to pursue another master's and possibly a PhD, something that sits right at the intersection of social impact, tech, and policy. I'm not quite sure what the exact path looks like yet, but I know I want to help build alternatives, not just highlight what's broken. I want to contribute to building better systems that reflect the realities of all people, not just a few privileged ones.

Karen: Yeah, absolutely. That is so essential, and you're contributing a viewpoint here that very much needs to be amplified. So I'm happy that you're here.

Can you share a specific story on how you've used a tool that included AI or machine learning features? I'd like to hear your thoughts about how the AI features of those tools work for you or didn't? What went well and what didn't go so well?

Rebecca: I think there's two sides to this. The first example I can think of would be the story that I talked about with the AI-powered chatbot that we did for the firm. It was designed specifically for internal use to help the team quickly access and cross-reference legal information. That was my first time really being involved on the technical side of the tools, of how all these puzzle pieces come together. We pulled from a mix of sources so the tool could give responses that were actually relevant to their work. The developers built the backend using Django, the front end in Vue.js, and deployed everything on AWS to make sure it was scalable and secure. On the AI side, they used OpenAI language models through LangChain and leaned heavily on advanced prompt engineering and agent-based workflows to improve how the chatbot responded. To gather some of the Congolese legal data, which wasn't always easy to access, we used tools like BeautifulSoup to scrape publicly available content. We also benchmarked responses with Perplexity AI, which really helped us fine-tune the accuracy.

What went really well was how quickly the internal team of the firm started using it to speed up their research and make legal lookup tasks much easier. Also it created a bit of a mindset shift. They started seeing AI not just as a buzzword, but as something they could actually use in their daily work. That was a big win for me, especially in a field that's usually pretty risk-averse.

And of course there were the challenges. Like many AI tools, it would generate hallucinations, especially when the prompts were not specific enough. And the experience really opened my eyes to just how important context is when developing AI tools. I realized that it's not enough for something to work technically; it has to make sense within the realities of the people using it. So for me, those were big lessons.

And then the other side, so outside of that project, I use tools like ChatGPT and Claude quite a bit for brainstorming, refining my writing, or even just sense checking ideas. Grammarly helps with editing and I use Perplexity and NotebookLM for research. They've made part of my workflow faster and more focused. But I always use a critical lens with those, and I always want to make sure that the output still sounds like me. So I always make sure to bring in my voice, and there's a lot of back and forth with me and the tools most of the time.

And I do explore other tools now and then. But I really try not to chase every shiny tool that comes out there. I'd rather go deep with a few that are aligned with what I want to do.

Karen: Yeah, that sounds really sensible. It's better to get proficient with a tool that you're comfortable with. I'm curious, you mentioned the adoption of your chatbot by the team. And that's always a good sign if people actually want to use it and start asking about it! You mentioned that when the prompts weren't specific enough, that tended to lead to more problems and more hallucinations. Is there something that you found, a way to help the people who were using the tool, to be more specific in their prompts?

Rebecca: Definitely, and that's some of the biggest work that we had to do. We were going in trying to be very technical, so we didn't expect to see challenges at the mindset level, right? And I think that was one of the blind spots that we didn't look into. We thought, I mean, it's just a prompt, you're talking to a tool. Clearly you're just using your words. But then I realized that it's all in the prompt also. That's where the biggest problem might actually be, 'cause the tool can be great, the tool can be there. If the prompt is not there, if it is not at the level that it should be to get the output that is expected, then we have an even bigger problem.

So we took two kinds of initiative to fix the problem. We tried to explain to the team how to go about the prompts. You actually need to be pretty clear about what you want to ask. There's a certain level of knowledge that you need to have even if you're trying to get an answer from the tool. So as much as they were trying to use it for research, they still had to do a whole lot more to understand what a prompt is and what it looks like. You don't just ask a plain question. You need to be as specific as you can to be able to have a very specific answer and output.

But that was just one side. The other side was also, I believe, the tool did just not understand the context sometimes, right? Because Congo has its own laws, has its own legal frameworks, and then sometimes there was just some sort of contradiction between whatever it is that the tool took from a global source and then was trying to bring it into the Congolese context. So we needed to rely much more on the Congolese context.

We ended up doing even more research on finding data about what the Congolese laws are about. It was kind of hard to find the data, 'cause not everything was online. So at some point we even had to go find hard copies and try and scan them. And we ended up doing more work than we thought we would do. But yeah, we tried as much as we could to mitigate those issues. And the result that we saw was improvements in how the team was prompting. We tried to hold their hands as much as we could in showing them the direction in which they had to continue.

So at this point, I hope that they are doing better with the prompt. I had left CET, Congo Excel Technologies, while the project was still going on, but at the time I left, they were already making some good progress.

Karen: Yeah, that's a great story. I really like hearing that. I've heard similar sentiments from other guests about that. If you ask it a question in a domain where you don't really know enough yet to be able to judge if ChatGPT is giving you a garbage answer, that's when it's dangerous to use. But if you know enough to be able to ask it questions, or to challenge what it tells you, when it gives you something that's not right, then that's when it's the most productive.

Rebecca: Yeah, definitely.

Karen: That's a good example of how you've used AI-based tools. Can you share if you've avoided using AI-based tools for something, or for anything, and maybe share an example of when, and why you chose not to use AI for that?

Rebecca: Yes. So I have so much to say about this, but I will try to keep this short! So I definitely see AI tools as assistants, and that's how I approach them. But with that in mind, there are definitely moments where I choose not to use them at all. For instance, when I'm working on something really personal, like writing about my lived experience or reflections that are culturally or emotionally layered, I just prefer to use my own words, my own thinking. AI can be helpful, but sometimes I feel that it doesn't carry the cultural and emotional weight and context that I'm trying to express in my work. It just felt flat at times. So I've tried.

And then, so the reason why I wouldn't use it is because I gave it a try and I didn't like the outputs. And I was like, “Okay, maybe not here.” And definitely when it comes to data confidentiality and the project that I work on, I'm definitely not using AI for that. And if I'm designing something for a local community, I prefer also not to use AI, because at this point where we're at, it's not reflective of the context that I work in, often.

And I was having a discussion not so long ago with someone from the MERL Tech community, and it was about understanding what is “Made In Africa AI Evaluation”, right? And the evaluation community in Africa is really trying to bring in AI, and what does that look like in evaluation that is rooted in an African context? And for me, that's where we keep on struggling because it's just not reflective of our ways of knowing, of our ways of evaluating, of our ways of doing. So then trying to use AI in that context can really be contradictory sometimes, most times. And I think at this point where I'm at, when it comes to evaluation and evaluating a program that is being implemented in an African context, I just try as much as I can not to involve AI tools, although there are some AI LLMs that are being developed with the African context in mind. Yeah, let's just see how it goes. But at the moment, I don't think we are there yet.

Karen: Yeah, that's a good overview and I like that example. Thanks for sharing that.

So one concern I wanted to talk to you about is where AI and ML systems get the data and the content that they train on. A lot of times they are using data that we've put into online systems or we've published online. And the companies are not always transparent about how they intend to use our data when we sign up. I'm wondering how you feel about companies using data and content for training their AI and ML systems and tools, and whether ethical AI tool companies should be required to get consent from and credit and compensate the people whose data they want to use for training, or what we call the three Cs rule.

Rebecca: Yeah. Yeah, I really believe that ethical AI development has to start with the three Cs, especially when people's data or digital labor is being used to train the systems. The problem is a lot of companies, like you said, are not transparent about that. And they will say they have informed users through long legalistic terms and conditions, but honestly, most people don't read those. And even when they try, the language is often deliberately confusing. So it becomes less about informed consent and more about legal cover.

So for us in the Global South, this reflects a deeper systemic problem of digital and data extraction. I actually talk about this quite a bit in my newsletter. The pattern is familiar. The Global South provides the raw inputs, data, content, label, while the Global North builds the systems, holds the decision making power, and benefits the most. So if companies truly want to build ethical tools, they must move beyond surface-level consent, and design systems that allow people to know, understand, choose, and benefit. Anything less reinforces the same extractive systems we've been trying to dismantle.

I believe ethical AI must go beyond ethical performance. It's about respecting people's data, stories, labels, and all. We can't keep talking about innovation while ignoring the invisible foundation it's built on.

Karen: Yeah, absolutely. And you mentioned data content labeling. There have been some stories recently about companies who are exploiting workers in Kenya and other African countries for doing data labeling and data moderation and other, what they call data worker, data enrichment jobs.

Rebecca: Mm-hmm.

Karen: And not treating the employees right, and subjecting them to reviewing lots of terrible things that no one should have to look at for eight hours a day. That's an additional aspect of ethical behavior, beyond just the content, to other aspects of the whole ecosystem of, like you said, where we get the data and who controls the power and builds the systems and benefits from them.

Rebecca: Exactly. And I think there's an entire chain there, right? Like you said, when we're talking about ethical development of this, or ethical AI, we really need to look at the entire spectrum, and not just at the extraction part of the data. But who is behind this? Who are the laborers, who are actually working physically? And what's going into this physically? That also needs to be the center of attention in these discussions.

Karen: Yeah, absolutely. Thanks for sharing that.

As a person who uses AI-based tools, do you feel like the tool providers have been transparent about sharing where the data used for the AI models came from, and whether the original creators of the data consented to its use? Or whether they used ethical labeling practices or any other aspects of ethics?

Rebecca: No, I actually don't believe AI tool providers have been transparent at all. And that lack of openness has created a serious trust gap for me, and I believe for many other people. As someone who actively works with these tools, I often feel that disconnect between their usefulness and the silence surrounding how they are actually built. Not just in terms of the training data, like we said, but across the entire value chain. It's the entire value chain that feels obscured to me. Most companies remain deliberately vague, defaulting to phrases like 'publicly available data' without unpacking what that actually means.

Content that is often deeply contextual and personal gets scraped, abstracted, and then embedded into massive models with zero trace of its origin or creators. And the fundamental questions remain unanswered: who gets credited, who gets compensated, and who stays invisible? So like I mentioned before, it becomes even more concerning when we think about the data from the Global South. Our languages, our stories, our knowledge systems, are being absorbed into models without any recognition of their origin, context, or even value.

So there is a sense of deja vu here for us: extraction without acknowledgement. It's a familiar pattern dressed in new tech. Some call it digital colonialism. Others call it data colonialism. So it's just another form of colonialism where historical imbalances are rebranded in sleek, tech-powered formats.

The recent news that Disney is suing Midjourney for allegedly training on copyrighted content is significant, because it could force AI companies to finally open their training books and reveal whether their data was ethically sourced. If successful, I believe the case could fundamentally change how AI labs operate and set a powerful precedent for transparency. But the nuance here for me is that Disney can sue because it has the legal and financial power to do so. Most independent creators, especially those from regions like mine, don't have that kind of financial power. So while this case might set a new industry benchmark, it also reveals a deeper imbalance: who gets to protect their intellectual and cultural property in this new AI era.

And for me, there's another aspect that's really troubling me, which is the erasure of the entire labor chain, because beyond the data content lies the upstream story that predates any code. Before the algorithms, before the dataset, there are people, minerals, labor. The very foundation of these systems is built on extractive practices we rarely talk about in AI conversations, and that silence is dangerous. How the AI tools remain largely excluded from transparency conversations is very disturbing to me. And that upstream part of the supply chain forms the AI material foundation. We often just don't pay attention to it.

And I don't think we can address bias selectively. To talk about bias, we have to look at the entire spectrum of it and really acknowledge that these systems are interconnected. They are interconnected systems of extraction and exploitation, and they must be examined holistically. So the bias is not just in the data, it's in the design -- the distribution, the invisible labor, the hidden costs. Unless the providers are willing to be transparent, not just about the data set, but about the full life cycle of the system, including the materials and the people behind them, then I think it's hard to call any of these ethical, no matter how shiny the outputs may look.

Karen: Yeah, that's absolutely true. And actually, I have a book on ethical AI that I am getting ready to publish. And I would love it if you'd have a chance to take a look at it and see if I'm missing anything really important, or if I have the right emphasis on it. It's on one of the articles that I had written back in March, with five parts of the ecosystem. It starts with the data centers and how we're using all these important minerals and doing mining and damaging the environment by the way that we make the semiconductors and build the data centers.

And then moving on to, you get the data and whether it's sourced, and then you do the data processing and labeling and enrichment. And looking, so looking at those five different areas. And I feel like there's just not very much awareness of all these different aspects. They all play into bias, or more broadly speaking, the ethics of everything. And I think people just don't think about it. A lot of people don't even know. They just fire up their browser and they use a chatbot.

Rebecca: Yeah.

Karen: And they don't think about what all is behind it, you know?

Rebecca: Yeah. It's very sad. And for me it's just heartbreaking. This month, I was speaking as part of a panel at the gLocal Evaluation Week. And I was asked to talk about the hidden cost of AI. And the first thing that I said was that a lot of people, like you said, engage with these tools without even understanding what's actually carrying this, right? AI is not weightless. There is a lot of weight that comes with it. The data centers. Like you say, there are minerals, and in my country, in the eastern part of the country, you actually have rebel groups. This might not have to be in the interview because I don't want it to sound political. But you have a lot of child labor happening, child miners, who are actually going into those mines and mining with their own hands, because they're being forced to do that by rebel groups that are being sponsored by these AI and tech companies because the demand for these tools is just a lot.

And it's this very weird cycle where you have the child miner that is powering the AI tool, the AI model that's going to predict the next drought in Kenya, right? And then after that you have an intervention. So I'm talking about the development sector now, an intervention that's going to be developed based on using those AI tools to address the drought in Kenya. And then you have evaluators now who come into the picture. And they now have to assess that intervention using AI tools. And then after that, you have the next AI global summit in Africa that's going to happen and going to praise how AI has helped us be very responsible with climate.

And with every single stage of the cycle, we are erasing that child who paid his childhood or her childhood to make all of this possible. We need to be aware of how complicit we are in this entire cycle, because we are. And the thing is not about making us feel bad. So to the evaluation community, what I had put out there was, I think the very good starting point would be to acknowledge that we are complicit, and then to see how we can move from complicity to accountability. What do I do where I am right now, as an evaluator? The first thing would be to, at the very least, have an AI policy, a personal AI policy, an evaluator AI policy, understanding what is the upstream part of this AI development. Tools, stories. Where do I fit in and what can I do from my very little corner of this space to just be able to be accountable?

For me, that's really a good starting point. We might not be able to change how these tech companies operate, but the awareness of this part of the story and being able to talk about it without feeling uncomfortable, facing the truth, for me is already a good starting point.

Karen: Yeah, absolutely. I couldn't agree with you more. That is super important. You mentioned earlier that you don't want to just talk about problems, you want to talk about solutions and things that we can do. And I think that pragmatic approach is super important as well, because if all we do is talk, and we don't do anything, then we haven't solved any problems.

Rebecca: Mm-hmm. Definitely.

Karen: Yeah. Absolutely. So I want to focus for a little bit on the way that AI has affected you in your personal life. As consumers or as members of the public, our personal data and content has probably been used by an AI based tool or system. If we're on LinkedIn, we know that they're using it. There are a lot of other situations. Do you know of any cases where your personal information has been used, and maybe without you being aware at first that it was going to be used?

Rebecca: Yes. I would say that I've experienced this, though not always through tools explicitly labeled as AI based. I remember applying for jobs online, only to later receive cold calls or emails from unrelated companies offering services I never consented to. Somewhere along the line, my data had clearly been scraped, shared, or sold without transparency or permission. And this was well before AI tools became commonplace.

That's part of why I think it's important to frame the current AI era as a continuation rather than a departure from existing extractive digital practices. I think AI tools haven't created a new problem as much as they have expanded an old one. They have inherited a broken foundation where data privacy and content were never truly prioritized, and now they are scaling it with far more reach, complexity, and opacity.

What's different though, I think, is the scale and the stakes. With AI, personal data isn't just used to target ads or sell products. It is used to train models that generate content, make decisions, or even predict our behavior. And most of the time, people aren't aware their data has been part of that pipeline. There is little to no informed consent, like we said, and there's no way to opt out. So it raises difficult questions about autonomy, visibility, and agency, and about how we can participate in digital life without being involuntarily conscripted into training someone else's proprietary AI model. So when we talk about data, it is input, but it's also about the label, the identity, the context attached to that data, all of which are being erased in the name of efficiency or innovation.

So I think, to this question, I would say: AI is not operating in a vacuum. I think it's just picking up where earlier digital systems left off. Only now with fewer boundaries, less oversight, and a lot more power. That's why the conversation can't just be about technical performance alone. We need to include ethics, transparency, and the right to say no.

Karen: Yeah, absolutely. Do you know of any company that you gave your data or content to that made you aware that they might be using your information for training an AI or ML system? Or did you ever get surprised to find out that somebody was going to use it for that? Like you said, the terms and conditions can be very obscure and not really a good mechanism for consent.

Rebecca: Mm-hmm. I didn't know, but I read the recent update from Meta about using all public data from their platforms to train their AI models. And it was just unsettling because I think, for me, it is the disparity in how they are handling this globally, right?

So you have people in certain regions, like the EU under the GDPR, where they are given clear and actionable ways to opt out. But then for the rest of us, especially here in Africa, there's been no such transparency or mechanism provided. I haven't come across any region-specific process for users in African countries to opt out or even be properly informed about what's happening with the data.

I know that South Africa does have POPIA, which provides some data protection rights and uses an opt-in consent model. It doesn't seem to be giving us the same clear opt-out mechanisms for AI training that European users received. And also, the law seems to exist on paper, but then practically, the implementation around AI training is kind of lagging behind. So it's not at the same level as what we see in regions with stronger regulatory frameworks.

So it feels like, once again, we are just being swept into these massive data policies by default, without consultation or clarity. And even when information is technically available, it's often buried in these formats that aren't easily digestible, and tucked away in help centers. So again, it's just so much jargon that I don't think most of us understand. And it keeps reinforcing the feeling that data from the global south is just an unprotected resource that can be mined without consequence.

And I think there is something that I need to mention. As much as we can accuse Meta and say that they are kind of just using these terminologies or this way of speaking that we might not understand, I do think that as users in this part of the world or any other part of the world, there is a level of responsibility when it comes to digital literacy. It plays a crucial role here too, because as users, we need to be able to ask the right questions to find answers that actually help us. So there's this level of responsibility on our part to push back when possible because the companies are capitalizing on the fact that most people don't fully understand what's happening with this data.

And at the same time, it's not that simple, right? Because if you think about someone in the DRC who's using Instagram and Facebook primarily to escape their precarious realities, for them, there's an element of "I don't really care about all that when I don't know where my next meal will come from."

And that's completely understandable. There's a lot to unpack here, like I usually say, in terms of privilege and access when we talk about digital awareness. But the bottom line remains that companies like Meta and others need to take global users seriously. Not just those covered by Western regulatory frameworks, but also the remaining ones. And we need to move away from these systemic inequalities. I don't think we're asking for special treatment. We're just asking for the same respect and agency that users in other regions of the world receive.

Karen: Yeah. One thing that I've been hearing a lot and learning a lot more about, as I've been doing these interviews with people in other regions: the people who are covered by GDPR in the European Union are fairly well protected, much better than in other countries. In the US we actually have very little protection. When Meta announced last summer that they were going to start using our information and that they had this whole complicated opt out mechanism, I went through and opted out. And they basically said, "No, we don't have to respect your request, so we're not going to."

Rebecca: Oh, wow. Wow.

Karen: Okay, well, in that case, I'm going to delete my account. So I deleted all my content.

Rebecca: Oh, wow.

Karen: Yeah. So it does vary quite a lot, and I'm envious of the people who are covered by something like GDPR. And Japan has had some good privacy protections for a while. But a lot of the regions of the world do not have this protection. Then it's really a matter of business ethics. They respect us when they're forced to by regional laws. But otherwise, they say, "no, we're just going to make more money off of you."

I don't know if you heard the latest thing that Meta is doing. I just read this. I don't use Instagram or Facebook any more. But they have this new feature they call cloud processing, and they're offering to people, "Hey, you'll turn on this cloud processing feature and we'll select pictures from your camera roll on your phone, and upload them to offer you some features." Like, okay, do people realize that they would be giving someone access to all the photos on their phone? You know, personal pictures, screenshots of confidential information. They're not exactly making it obvious, but that's what it would be. And that's just not right.

Rebecca: That is not right. And that's exactly, I mean, wow. As you were talking, I was trying to picture it. I mean, I hope they haven't started rolling this out already here or back home in the DRC. But, I mean, people don't even pay attention to understanding what that would mean, right? Like you said, they'll just see, "oh, it's a new feature on Instagram or Facebook. Yeah, let me just click on it without clearly understanding the implications". And wow. And oh.

Karen: There's someone I follow on data privacy, on Substack -- Luiza Jarovsky, if you haven't seen, she usually calls these things out pretty quickly. And Ravit Dotan also. They are really good at highlighting these things. They will go in and read what these terms are and say, "Okay, do you all realize that this is what this means?"

And I saw that post from her and I thought, "Well, okay -- makes me glad that I never did Instagram and that I really don't do Facebook at all anymore." I don't even have their apps on my phone, so they have no chance of getting my camera roll. But the fact that they feel like that's an okay way to operate is just jarring.

Rebecca: Yeah, and like I said, I think they are capitalizing on the ignorance of the majority, the critical masses, right? I mean, you are closer to this understanding because I believe you're exposed to this concept and it's just you are, there's proximity, you know. But then you look at other regions of the world where they're not even aware of implications. And for me, that's heartbreaking, that these businesses will use that ignorance to continue with the operations, the extractive systems. That's really heartbreaking, to be honest. At this point it's kind of hard to be able to come up with solutions. Or I would say solutions is the only word I can come up with, to bridge this gap for communities in the African region. Because they will tell you they don't really care, but they don't understand it at the moment right now. It's only a matter of time before they can get that understanding.

So then, as someone who's aware of this, you're kind of in the middle of, what do I need to do to get you to a point where you understand this, without sounding like someone who's trying to annoy you? And it has always been the spot that I'm in, right? You do have these people telling you "You're paying attention to things that don't matter to us." And I'm like, "Yeah, because I kinda understand where this is coming from and I understand where you are. But we need to be able to do something about it." And sometimes I do feel completely powerless. But yeah, eventually we will find a solution.

Karen: One thing that I try to look for and try to focus on is where there are alternative solutions. Like, you mentioned having an artistic side. A lot of people have talked about how people's artwork and photography have been stolen. But there are competing applications that are coming out now, like there's one called Cara, C A R A dot app, and other apps that say they are building themselves on a commitment that they will not exploit your content, your pictures, your images.

We may feel powerless in a lot of cases, but I think we have more power maybe than we realize, or more power than the people doing all the hype want us to think we have, right? Because we can say no. We can use something other than Instagram for our portfolios, and things like that.

Rebecca: Yeah, definitely. If you have the names of alternatives, I would definitely love to get them, really, for the sake of sharing them with my communities too. I think, like you said, the companies, all the actual apps that we know, the platforms that we know right now, they have kind of built this trust, weirdly enough, with the communities, right? So there's always this skeptical attitude towards something that is new. I mean, "Is this as powerful as Instagram? Is this as known as Instagram?" But if that's the best way forward, then I will definitely try and talk about this to them, and try and see how we can introduce tools that are much more responsible and ethical.

Karen: Yeah, absolutely. I don't know if there's a central list somewhere. Now I'm really curious to see if I can find a list. And I don't know that I should be the one to curate it, but I do know of some alternatives, and if I don't find a list, then I guess I'll start one.

Rebecca: Oh, yes. You do such a pretty good job, Karen, I have to say. So I trust you.

Karen: Well, I'll see. I'd much prefer to find one that someone else is keeping up. And there are a lot of organizations that are working toward this. Like there's the Algorithmic Justice League and a lot of other groups that are focused on looking for cases where bias is a problem. And so I do know some alternatives, like for Twitter, the obvious alternative is Bluesky.

Rebecca: Yeah.

Karen: And a lot of people have migrated there, including me.

Rebecca: I mean, I opened my Twitter account I think in 2011, and I've never used it since. It's just a lot for me. I have downloaded Bluesky. I just need to be much more active and see what it looks like.

Karen: Yeah. So there are some alternatives. And I'll see what I can find. That would definitely be a service, I think, if there isn't such a list, it would be a great service to have one with ethical alternatives for different types of applications.

Readers: do any of you know of such a list of ethical tool alternatives? If so, please share here!

Karen: You mentioned about the phishing, with your contact information being stolen when you applied for a job. Is there any other example you can think of where someone has stolen your information and used it and caused trouble for you? Like invading your privacy, or if it's ever cost you any money, that sort of thing.

Rebecca: Not that I know of, to be honest, and I hope it never happens. At this point I haven't been through a situation like that.

Karen: Yeah, let's definitely hope it stays that way, right?

Rebecca: Mm-hmm. Yeah.

Karen: Yeah, so, last question, and then we can talk about whatever you want. Everything that we've talked about pretty much illustrates why public distrust of AI and tech companies has been growing recently. I'm wondering what you think is the most important thing that these companies would need to do to earn and then to keep your trust. And if you have any specific ideas on how they could do that?

Rebecca: Yeah. When I think about trust, I think about the families displaced by mineral mining in conflict zones in the DRC, the content creators whose work trains models without consent, the communities whose knowledge gets scraped and commodified.

So the most important thing, in my humble opinion, that AI companies need to do is to interrupt this cycle of unaccountable extraction. Trust shouldn't just be about better privacy policies or ethics boards. It should also be about structural power redistribution. Right now, most of us have no say, like we have established, we have no say in whether our data, voice, cultures, or even the minerals from our lands become inputs for AI systems. There is no notification, there is zero compensation, there is zero recognition. And rebuilding trust will mean centering affected communities in decision making. The people who bear the real costs, whether that's a Congolese miner, or a content creator, or a data worker, they should have power, actual power in shaping how AI develops. Not just token consultation.

And this means the full supply chain remains visible and these companies are able to disclose not just the carbon emissions, but where the minerals were mined, who assembled the chips, who moderated the toxic content and under what conditions. And I think if the human costs are still hidden, then there is no such thing as clean AI.

And it also means that these companies will need to build consent into the foundation, instead of just optimizing for convenience. Because we need systems where communities can meaningfully participate in or opt out of AI development that affects them, especially the marginalized groups.

And frankly, it means that the companies need to accept legal accountability, not just in terms of ethical promises, but real frameworks with real consequences when extraction causes harm. As hopeful as this sounds, I think we are still very far from seeing it play out in any meaningful way, but I remain hopeful that at some point we might see this happen.

Karen: Yep. I think we have to hold onto our hope, right?

Rebecca: Yes. That's the only thing we got.

Karen: Well, Rebecca, thank you so much for joining me for this interview and for covering these standard questions. I'd like to hear what other thoughts you have about AI, if there's anything else that you'd like to share with our audience.

Rebecca: Yes. I hope that this makes it to the audience, but also more especially the communities that are often excluded from shaping tech. And I would like to encourage those to stay curious and ask the questions. I would like to encourage every single one of us to keep pushing back against the systems that aren't built with everybody in mind.

I think for the excluded communities, I would say that they do not always have the know-how or the resources, but they do have perspective and experience, and that matters, and that should be included in these conversations.

So I do appreciate you having me here, and I hope that I brought a good level of light in terms of the region that I come from and my communities and those like me that have always been excluded from these conversations.

As for what's coming next in terms of my personal work and the things that I do, I will be continuing to explore the intersections of AI and data and tech justice through my writing and community conversations, and I'll be sharing more soon through my Substack.

I am quietly building a few initiatives that aim to center African voices in the AI space. One of them is a project-based mentorship program for African women who want to grow in AI and data. And the project is grounded in purpose, not just to acquire technical skill, but it's about the community shaping whatever it is that we want to see as an outcome of this program. And I'm still at the concept refinement phase and currently collecting insights from potential participants to shape the program meaningfully. And there's a link that is dropped here for anyone who would like to participate and provide insights. [link for mentorship program]

And I'm also developing a knowledge hub focused on data, tech, and AI through an African lens that is drawn from my TAIS interviews. TAIS stands for The African Innovators Series, interviews that I have been running here on Substack. It's about making complex ideas accessible, contextual, and rooted in lived African realities. You can also find a link to that initiative here. [link to the interviews]

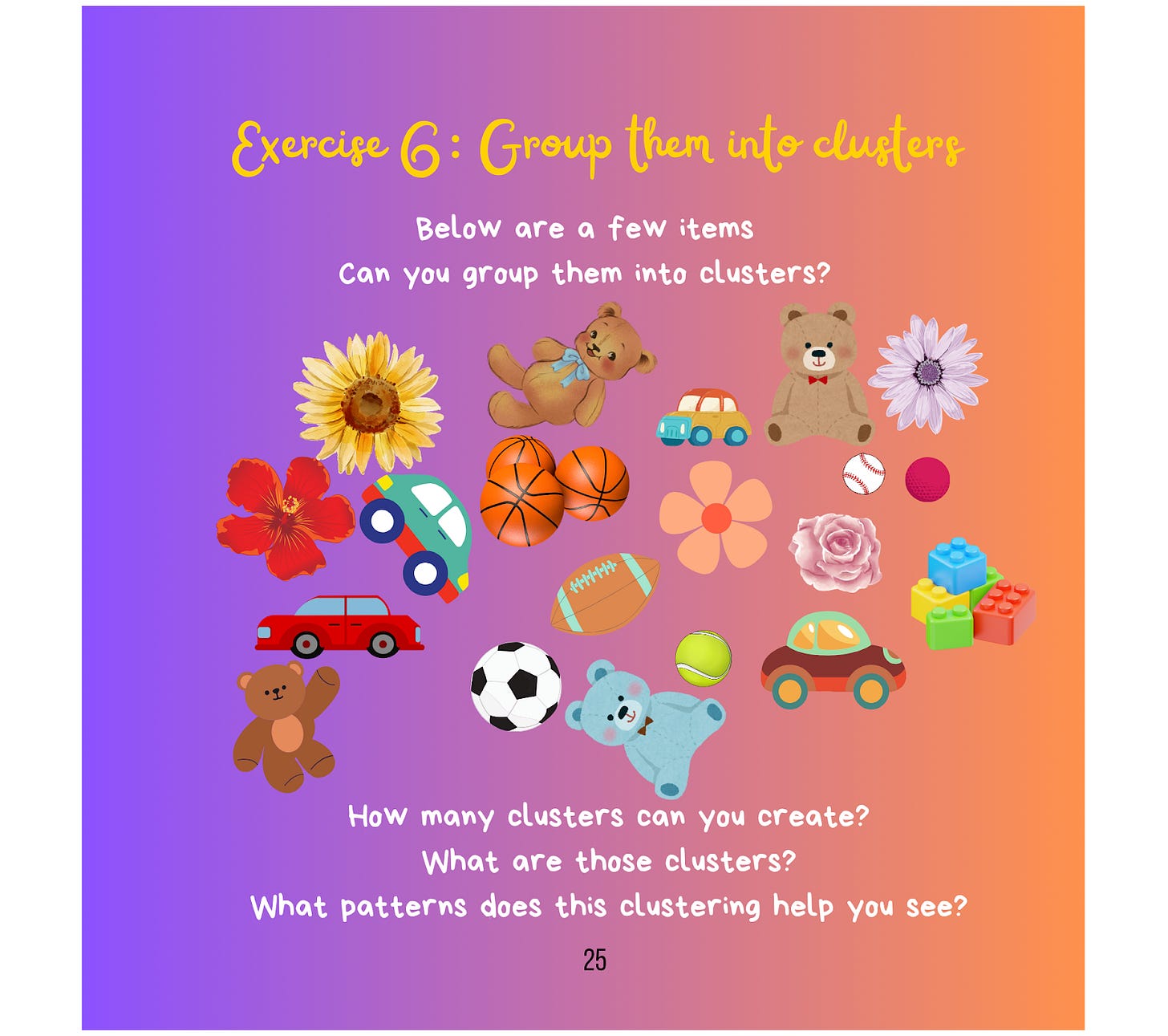

And on a more personal note, I've been supporting my daughter in her writing journey and she just finished writing her first book about data. She wants to have other kids explore data the way she does, and she came up with the idea to create a fun, engaging book for kids, to help them be empowered in terms of data literacy. So we published the book independently on Amazon KDP. [link to the book on Amazon]

The long-term dream is to get this book into the hands of as many children across the continent and the world as possible, and we would appreciate support for the cause because every child deserves these critical thinking skills.

So please consider buying one for your kids or sponsoring a book for a child out there who needs it most. And the link is also dropped here for you too, to purchase and/or donate the book to a kid out there. [sponsorship link] Thank you.

Karen: You're very welcome, Rebecca. I think it's so awesome that your daughter wrote this book. She's what, nine years old?

Rebecca: She is nine. She's nine. This is a journey that we started last year. Really just like doing laundry, folding the clothes, and it's always been such a messy experience. So then I was like, "You know what? Let's cluster because I'm not liking this." And that's actually how it started. And she kept asking questions. And she's such an investigator, and I'm like, "This is something that I need to invest into." So I started teaching her about data and what it is, what it looks like, what can we do with it, and she just gained the interest.

Karen: Yeah, that's awesome. Because data literacy is just so important for all of us to understand what it is. And just at a high level -- we don't all have to be data scientists and write machine learning models, right? But we should understand enough to ask good questions. And starting with kids, I think the age range for the book is, I want to say 6 to 11, or something like that?

Rebecca: Yes. It is 6 to 11. Yes.

Karen: Yeah. And that's awesome. I've got one grandchild who's in that age range and I've definitely got to get that book for her. Her parents are pretty savvy, but if she gets it and her friends start getting it, that would be a good thing.

Rebecca: Yeah.

Karen: Thank you. Thank you so much. I think it's just awesome that you did that with her and that she wrote her first book. That's just incredible. And I think that deserves wider support.

And you mentioned too about your mentoring program. We have this She Writes AI community that we're building up on Substack. And we're over 450 people. And you were our first and definitely, hopefully not our last, from Africa.

Rebecca: Hopefully not, hopefully not.

Karen: But that would be maybe a great place to find mentors from all different areas around the world. I think we're over 50 countries now. So maybe when this interview comes out, or even before then, we could post something there in the subscriber chat and see if anyone's interested in getting involved.

Rebecca: Yes, definitely. I love the idea. Yeah. Great.

Karen: All right, well, I know it's late for you, Rebecca, so thank you so much for staying up late and doing this interview with me. Really great insights. And we'll get your voice out there so more people can hear these perspectives from you.

Rebecca: Thank you so much, Karen. I really do appreciate this, and I enjoyed the conversation.

Karen: Yes, me too.

Rebecca: I was trying to read my notes, but I improvised a lot. But I think that is because I felt comfortable somewhere along the conversation. So thank you very much for this.

Karen: You're welcome!

Interview References and Links

“Exploring Data With Zee” (buy on Amazon, sponsor a book)

Rebecca Mbaya on LinkedIn

Rebecca Mbaya on Substack

About this interview series and newsletter

This post is part of our AI6P interview series on “AI, Software, and Wetware”. It showcases how real people around the world are using their wetware (brains and human intelligence) with AI-based software tools, or are being affected by AI.

And we’re all being affected by AI nowadays in our daily lives, perhaps more than we realize. For some examples, see post “But I Don’t Use AI”.

We want to hear from a diverse pool of people worldwide in a variety of roles. (No technical experience with AI is required.) If you’re interested in being a featured interview guest, anonymous or with credit, please check out our guest FAQ and get in touch!

6 'P's in AI Pods (AI6P) is a 100% reader-supported publication. (No ads, no affiliate links, no paywalls on new posts). All new posts are FREE to read and listen to. To automatically receive new AI6P posts and support our work, consider becoming a subscriber (it’s free)!

Series Credits and References

Disclaimer: This content is for informational purposes only and does not and should not be considered professional advice. Information is believed to be current at the time of publication but may become outdated. Please verify details before relying on it.

All content, downloads, and services provided through 6 'P's in AI Pods (AI6P) publication are subject to the Publisher Terms available here. By using this content you agree to the Publisher Terms.

Audio Sound Effect from Pixabay

Microphone photo by Michal Czyz on Unsplash (contact Michal Czyz on LinkedIn)

Credit to CIPRI (Cultural Intellectual Property Rights Initiative®) for their “3Cs' Rule: Consent. Credit. Compensation©.”

Credit to for the “Created With Human Intelligence” badge we use to reflect our commitment that content in these interviews will be human-created:

If you enjoyed this interview, my guest and I would love to have your support via a heart, share, restack, or Note! (One-time tips or voluntary donations via paid subscription are always welcome and appreciated, too 😊)
