Ethical AI Standards by Region [PART 2 Supplement in "Unfair use?" series]

Supplement to Part 2 on ethics of generative AI for music, summarizing international, regional, and country-level initiatives on AI ethics. Ongoing work in progress.

Welcome to this supplement for the second article in our series on ethics of generative AI for music, and how it affects PRODUCTS and PEOPLE!

This article series is not a substitute for legal advice and is meant for general information only.

In PART 2, we tackled HOW each stakeholder group is affected by ethical concerns (including ownership rights and model biases). This page is a supplement to Part 2 that details international, regional, and country-level initiatives on AI ethics frameworks. Although these frameworks are not specific to music, they are still highly relevant.

ETHICAL STANDARDS

Where industry standards and regulations have been established, they can provide insights into what the people in those regions support and believe. Several excellent regional and international guidelines have emerged in the past few years on the ethics of artificial intelligence and on how to design AI-based systems that are inherently ethical.

International

Global Views and Initiatives

A 2019 report from the Health Ethics and Policy Lab at ETH Zurich found that a global consensus was beginning to form that AI should be ethical, and that the top 5 key ethical principles were [1]:

transparency

justice and fairness

non-maleficence

responsibility

privacy

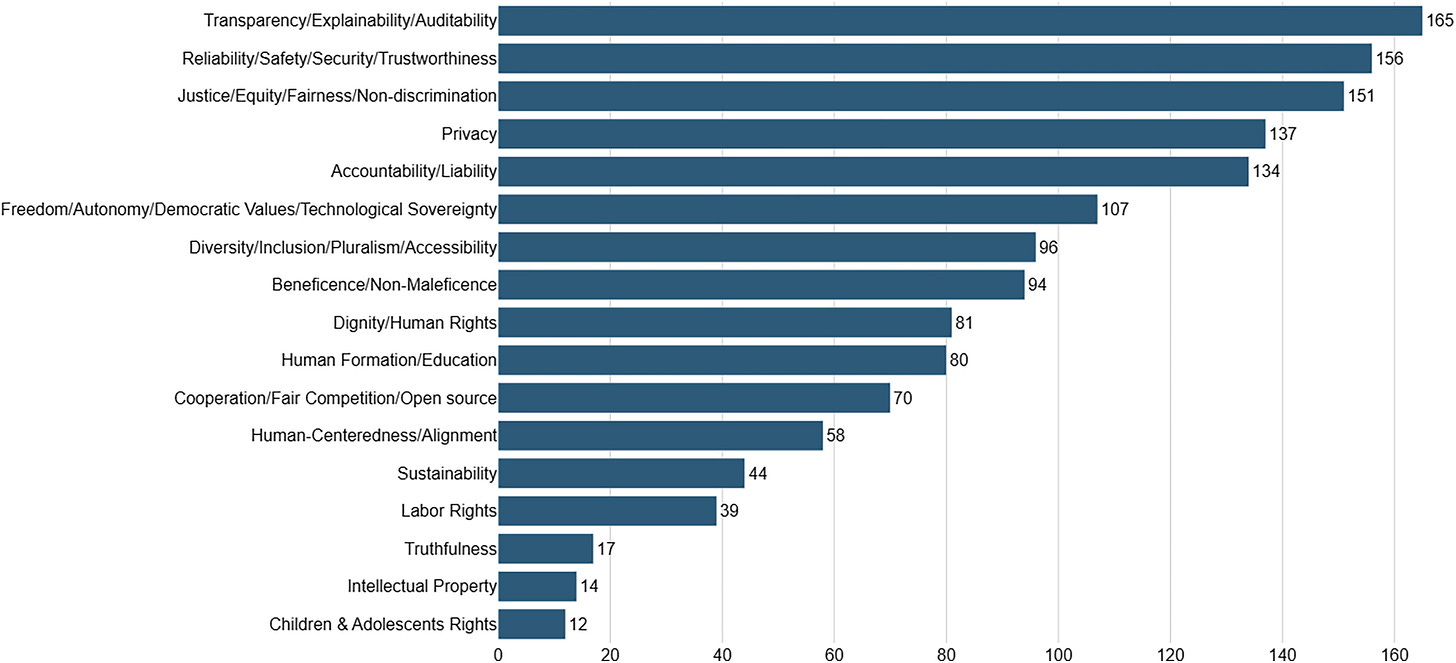

In a comprehensive Oct. 2023 “meta-analysis of 200 governance policies and ethical guidelines for AI usage published by public bodies, academic institutions, private companies, and civil society organizations worldwide”, Nicholas Kluge Corrêa and colleagues concluded [2] that the top five most cited AI ethics principles are:

transparency/explainability/auditability

reliability/safety/security/trustworthiness

justice/equity/fairness/non-discrimination

privacy

accountability/liability

A complete list of the principles and frequency of citation is shown in the following figure from their report:

In the area of AI ethics, IEEE has spearheaded both IEEE Std 7000-2021 [3] and a 2019 report entitled “Ethically Aligned Design” [4]. Some countries and regions have reported that they are basing their AI ethics frameworks on IEEE’s Ethically Aligned Design.

Parallel global initiatives are being led and tracked by ACM, Alignment Forum, and DPGA (opensource.org). AI-specific organizations such as TheAssociation.AI [5] are also demonstrating leadership in this area.

United Nations

One notable recent global milestone is the United Nations’ adoption of a resolution on AI on March 21, 2024 [6]. The focus is on “safe, secure, and trustworthy AI systems”, excluding military domains. The “resolution was designed to amplify the work already being done by the UN, including the International Telecommunication Union (ITU), the UN Educational, Scientific and Cultural Organization (UNESCO) and the Human Rights Council.”

In addition to respect for IP rights (including copyright), the UN resolution calls for AI to address “disability, gender, and racial equality”.

For a quick deep dive into these equality aspects, see our March 24 post “A step towards global ‘safe, secure, and trustworthy AI’” [7].

More context on the UN AI resolution is available in Luiza Jarovsky’s newsletter [8]. If you’re interested in learning even more about the UN AI resolution, check out this upcoming April 15 event [9].

Europe

In their Dec. 2023 article [10], Suzanne Brink and Holly Toner noted that the top 5 themes identified by Kluge Corrêa et al. in the 200 guidelines they reviewed were all “reflected in the [proposed] EU AI Act”, and pointed out that the impact on companies using AI would not be limited to just EU boundaries.

“The EU AI Act will have a wide geographical reach; it will impact anyone wanting to deploy an AI system to the EU market, from both inside and outside the EU.”

The passage of the EU AI Act on March 13, 2024 [11] is therefore a key milestone for global AI ethics, and not only for the EU.

Africa

For an overview of how AI governance work in Africa is leveraging EU progress on governance frameworks, see this post [12] by Ruth Owino, PhD, and check out the African Academy of AI [13].

This Feb. 21, 2024 writeup by lawyer Wilhemina Agyare [14] provided good context for major countries in Africa. More recently, this March 15, 2024 article in MIT Tech Review on Africa’s AI policy [15] reported:

“On February 29, the African Union Development Agency published a policy draft that lays out a blueprint of AI regulations for African nations. The draft includes recommendations for industry-specific codes and practices, standards and certification bodies to assess and benchmark AI systems, regulatory sandboxes for safe testing of AI, and the establishment of national AI councils to oversee and monitor responsible deployment of AI.”

As of March 2024, only 7 African nations (Benin, Egypt, Ghana, Mauritius, Rwanda, Senegal, and Tunisia) had defined national AI strategies, and none had yet implemented AI regulations. However, 36 of 54 African countries had established formal data protection regulations [16].

South Africa subsequently published a National AI Policy Framework in late 2024 [17].

Asia/Pacific

(TO DO: add brief notes on APAC as a region and any region-wide coordination)

Australia

Australia’s AI Ethics Framework (https://www.industry.gov.au/ai-ethics-framework) originated in 2019 and has continued to evolve. Their approach is based on the IEEE’s initiative on Ethically Aligned Design (https://standards.ieee.org/ieee/White_Paper/10594/, https://standards.ieee.org/ieee/White_Paper/10595/).

As summarized on this OECD page (https://stip.oecd.org/stip/interactive-dashboards/policy-initiatives/2023%2Fdata%2FpolicyInitiatives%2F24350), the key (voluntary) principles of Australia’s AI Ethics Framework are:

Human, social and environmental wellbeing

Human-centred values

Fairness

Privacy protection and security

Reliability and safety

Transparency and explainability

Contestability

Accountability

China

…TO DO: Add references

India

…TO DO: Add references

Japan

…TO DO: Add references

Singapore

…TO DO: Add references

South Korea

…TO DO: Add references

Latin America, South America, and Caribbean

Countries in these regions, including Argentina, Brazil, Chile, Colombia, Costa Rica and Mexico, have been actively working together to define regional and national AI strategies since at least 2020 [18]. Colombia began its activities several years ago, as this 2021 summary describes [19]. They are now working to move from being passive observers to influencers.

This IAPP article [20] provides a good overview of the initiatives in these regions, specifically the “Santiago Declaration” [21] established in Oct. 2023.

Canada

Canada hasn’t been sitting still. Among other activities, they’ve published guiding principles [22]; launched the ‘second phase’ [23] of their Pan-Canadian Artificial Intelligence Strategy (phase 1 began in 2017); introduced their first proposed AI bill, C-27, in June 2022; spun up a collaboration in July 2023 [24]; and announced a new batch of signatories on their voluntary AI code of conduct [25] in December 2023 [26].

The Canadian code of conduct focuses on 6 key areas, all of which map to the principles in the UN AI resolution:

Accountability

Safety

Fairness and Equity

Transparency

Human Oversight and Monitoring

Validity and Robustness

USA

US President Joe Biden signed a sweeping executive order on AI on Oct. 30, 2023 [27]. However, there are still some open questions, and comprehensive national guidance is still emerging. Most federal effort to date has focused on organizations like NIST and their AI-RMF (Artificial Intelligence Risk Management Framework) [28].

Like many other aspects of US politics and law, proactiveness on AI ethics and regulations varies by state. In the absence of a federal law on deepfakes, at least 15 of the 50 states are currently focused on addressing this one aspect of harmful, unethical behavior [29].

One state in particular, Tennessee, is addressing the music niche. Their “ELVIS Act”, which was signed into law on March 21, 2024 [30], is named for Memphis-based legend Elvis Presley. It aims to create an entirely new category of banned deepfake content: impersonating musicians and singers.

An excellent 2023 “Generative AI & American Society Survey” by the National Opinion Research Center [31] gives us three great insights [32] into US residents’ opinions on ethics of generative AI in particular:

A majority now “support paying artists and creators if their work is used to train AI systems” (57% agree and only 8% disagree)

Half “favor companies being more transparent about how AI models work and what data they use”

Two-thirds “favor labeling AI content” (content generated by AI)

Opinions in other regions may vary, however. (Results from similar surveys in other regions will be reported in the future as they are discovered.)

CAUTION

This “Water and Music” article [33] highlights a critical limitation of the major AI ethics frameworks: lack of focus on end users.

“One caveat of these widely available AI ethics frameworks is how they’re primarily focused on the research, development and business practices of AI. This is understandable, since the main responsibility lies with AI developers and policy-makers. But addressing ethical aspects of AI usage by end-users is essential.”

The article identifies the “Framework for Ethical Decision Making” [34] by the Markkula Center for Applied Ethics at Santa Clara University as one potential approach.

P.S. As this article was ‘going to press’ in early April 2024, I discovered that Ravit Dotan has shared a great list of AI ethics resources, trends in US AI regulation, deep dives into AI regulation issues, a list of AI regulation trackers, and her newsletter, along with AI/tech lawyer Ray Sun’s global tracker of AI regulation. I didn’t have a chance to review all of their goodness before publishing this article, but I’ve now subscribed to both and I’ll definitely be digesting their content afterwards!

If you’re deeply interested in tracking AI regulations, you may want to check out this Responsible AI tracker which was just launched on March 25.

FOOTNOTES

Anna Jobin, Marcello Ienca, & Effy Vayena, “The Global Landscape of AI Ethics Guidelines”. Nat Mach Intell 1, 389–399 (2019). https://doi.org/10.1038/s42256-019-0088-2

Nicholas Kluge Corrêa, Camila Galvão, James William Santos, Carolina Del Pino, Edson Pontes Pinto, Camila Barbosa, Diogo Massmann, Rodrigo Mambrini, Luiza Galvão, Edmund Terem, & Nythamar de Oliveira, “Worldwide AI ethics: A review of 200 guidelines and recommendations for AI governance”. Patterns, Volume 4, Issue 10, 2023, 100857, ISSN 2666-3899. https://doi.org/10.1016/j.patter.2023.100857

IEEE Std 7000-2021, “IEEE Standard Model Process for Addressing Ethical Concerns during System Design”, https://ieeexplore.ieee.org/document/9536679

IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems, “Ethically Aligned Design: A vision for prioritizing human well-being with autonomous and intelligent systems”, IEEE, 2019 (v1 PDF via hackernoon)

TheAssociation.AI (“The AI, Data, Ethics, Privacy, Robotics, & Security Association”), https://theassociation.ai

Announcement on UN Resolution on AI, https://news.un.org/en/story/2024/03/1147831

Karen Smiley’s Agile Analytics and Beyond newsletter post on bias and the UN resolution on “safe, secure and trustworthy AI”

Luiza Jarovsky’s newsletter post on the UN resolution on AI

African Academy of AI, https://aaai.africa/

“Reforming data regulation to advance AI governance in Africa”, Chinasa T. Okolo, Brookings Institution, 2024-03-15.

“Scaffolding for the South Africa National AI Policy Framework”, by Scott Timcke, Zara Schroeder, Drew Haller / Research ICT Africa, 2024-11-29.

SA Policy Framework is available at www.dcdt.gov.za/sa-national-ai-policy-framework.html